Hello,

Although I no longer do dual-narrowband imaging with an OSC camera, I recently had a discussion with a friend about ways to add the green and blue channels in order to get the full Oiii signal when shooting this way. I was shown some recent videos that cover this topic but, in my view, in a fairly simplistic way.

Being math inclined, I decided to try to understand whether it is even possible to get something better than the signal-to-noise ratio (SNR) of the green channel. I am sharing my reasoning here, along with some hints for a practical approach.

But first, some premises which I think are correct:

1. The Oiii signal is spread between the blue and green channels, since its wavelength is captured by both in a typical OSC

2. The blue channel in dual-NB imagery is always noisier than the green channel

3. The blue channel in dual-NB imagery always has less signal than the green channel

4. The SNR of the green channel is always greater than that of the blue channel

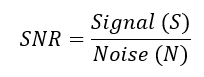

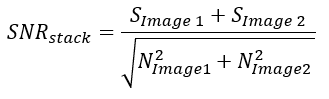

The SNR of an image is defined as

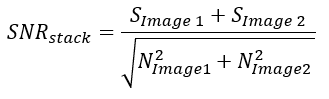

When summing two different images (1 and 2), the resulting SNR (stacked) is the following:

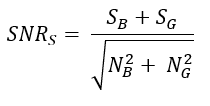

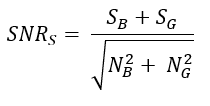

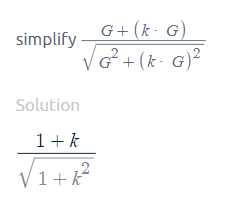

Applying the same to our green (G) and blue (B) channel, we have

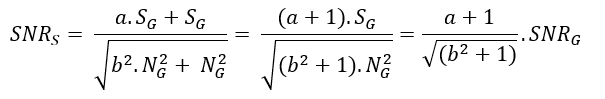

If we define the signal and noise of the blue channel are a given factor of the green channel, like the following

SB = a.SG and NB=b.NG

then we will have

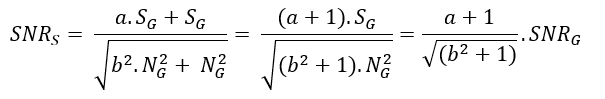

For the stack SNR to be better than the green channel SNR, the term (1 + a) / sqrt(1 + b^2) needs to be larger than 1. Therefore:

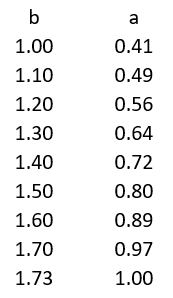
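Since the equations above are embedded as images, here is a reconstruction of the chain from the definitions in the text. It is a sketch that assumes the signals add linearly and the uncorrelated noises add in quadrature; the subscript notation is assumed and may differ from the original images.

```latex
\begin{align}
\mathrm{SNR} &= \frac{S}{N} \\
\mathrm{SNR}_{\mathrm{stack}} &= \frac{S_G + S_B}{\sqrt{N_G^2 + N_B^2}} \\
 &= \frac{(1 + a)\,S_G}{N_G\sqrt{1 + b^2}}
  = \mathrm{SNR}_G \cdot \frac{1 + a}{\sqrt{1 + b^2}}
  \qquad (S_B = a\,S_G,\; N_B = b\,N_G) \\
\frac{1 + a}{\sqrt{1 + b^2}} > 1 &\iff a > \sqrt{1 + b^2} - 1
\end{align}
```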

What does this tell us? It tells us there are certain conditions where it is possible to improve the SNR of the stack, but not every condition. Which conditions are those? Let's simulate: the next table gives the minimum value of a needed for each b:

So, if you have an image where the blue channel is 50% noisier than the green (b=1.50), then the signal in the blue channel needs to be at least 80% of the green channel's (a=0.80) for the stack's SNR to exceed the SNR of the green channel. With less than that, the combined image will have a worse SNR than the green one alone.

Why these values in the table? Well, since in dual-NB imagery the blue channel is always noisier (b > 1) and its signal is always lower (a < 1), then:

b must be smaller than 1.732 (the square root of 3), otherwise the required a gets larger than 1, and

a must be larger than 0.414 (the square root of 2, minus 1), otherwise b would have to be smaller than 1
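The minimum a for each b can be regenerated from the condition a > sqrt(1 + b^2) - 1. The following is a minimal sketch; the function names and the grid of b values are illustrative choices, not taken from the original table.

```python
import math


def min_signal_ratio(b: float) -> float:
    """Smallest a = S_B/S_G for which G+B matches green alone, given b = N_B/N_G."""
    return math.sqrt(1.0 + b * b) - 1.0


def build_table(b_values):
    """Return (b, minimum a) pairs for each noise ratio b."""
    return [(b, min_signal_ratio(b)) for b in b_values]


if __name__ == "__main__":
    # Hypothetical grid: b from 1.0 to 1.7 in steps of 0.1.
    b_grid = [1.0 + 0.1 * i for i in range(8)]
    print("   b   min a")
    for b, a in build_table(b_grid):
        print(f"{b:4.2f}   {a:5.3f}")
```

Any a above the printed value for a given b means the plain sum of green and blue should beat green alone under this model.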

Now, this is all very well, but can we anticipate whether we will get a better image or not? Not really: it is not easy to find the numbers in coherent units to plug into the formula and, besides, the main point is that there is no one-size-fits-all solution, contrary to what many available videos suggest. But there is a quick way to check whether combining the channels yields a better result or not, namely:

1. Combine the green and blue channels in whichever way you want;

2. In PixInsight, run the SNR script (under Scripts > Image Analysis) on the three images (green, blue and combined);

3. Compare which one has the larger SNR and use it.
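The same comparison can be tried on synthetic data before touching real frames. This is only a sketch under stated assumptions: a flat patch of constant signal with Gaussian noise, SNR measured as mean over standard deviation, and made-up values for the signal, noise, a and b. It is not what the PixInsight SNR script computes.

```python
import random
import statistics


def measured_snr(samples):
    """Crude SNR estimate for a flat patch: mean divided by standard deviation."""
    return statistics.fmean(samples) / statistics.stdev(samples)


def simulate(a, b, s_g=100.0, n_g=10.0, n_pixels=20000, seed=1):
    """Return measured SNR of (green, blue, green + blue) for S_B = a*S_G, N_B = b*N_G."""
    rng = random.Random(seed)
    green = [rng.gauss(s_g, n_g) for _ in range(n_pixels)]
    blue = [rng.gauss(a * s_g, b * n_g) for _ in range(n_pixels)]
    combined = [g + bl for g, bl in zip(green, blue)]
    return measured_snr(green), measured_snr(blue), measured_snr(combined)


if __name__ == "__main__":
    # Hypothetical cases: one where blue is weak and noisy, one where it is nearly as good as green.
    for a, b in [(0.5, 1.5), (0.9, 1.2)]:
        g, bl, c = simulate(a, b)
        print(f"a={a}, b={b}: green={g:.2f} blue={bl:.2f} combined={c:.2f}")
```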

In my limited number of tests, I've always found that the best approach is simply to drop the blue channel and keep the green, the same approach as @Luke Newbould. But who knows whether something different will work for somebody else.

Anyway, here’s my two cents about this topic, which hopefully will be helpful to someone. If someone sees any mistake in the reasoning, feedback will be most welcome.

Cheers!

|

I saw I'd been tagged in a post so thought I'd take a little look, - Crikey! That's one impressive post @Andre Vilhena !!

Your understanding of the underlying math at play here is truly leagues beyond my own, so thank you for applying your talents to this problem!

Your conclusion sums it up nicely, and definitely aligns with my own findings gathered from simple trial and error observations - while there's no 'one size fits all' solution, simply ditching the blue comes fairly close for me! :-D

Thank you again for taking the time to share this, genuinely - the more experimentation the better I think, be it theoretical or practical - the more people testing these things and independently reaching similar conclusions lends a degree of robustness to findings!

Best,

Luke

|

Something is off here. Say I have 10 frames and then shoot 1 more: that 1 additional frame of normal quality will always have less signal and more noise than the stack, yet you'll always stack all 11 and be better off.

Also, Image Integration, in my experience, assigns a weight of about 0.67 to blue, which absolutely makes sense and is consistent with the way we handle that channel.

|

Luke Newbould:

Your conclusion sums it up nicely, and definitely aligns with my own findings gathered from simple trial and error observations - while there's no 'one size fits all' solution, simply ditching the blue comes fairly close for me! :-D

Hi @Luke Newbould,

Thanks for the feedback. Indeed, I tagged you because I saw a recent comment of yours on a YT video on the topic, raising exactly this question of the noise being summed too. Hence, I decided to share these comments with you...

In my case, I also found that summing the channels doesn't pay off and ditching the blue is the way to go.

Cheers,

André

|

Hello @Rafał Szwejkowski,

Thanks for your comments.

The conclusion does not contradict your first paragraph. What I conclude is that there are certain conditions where stacking two images will not yield a better SNR than the SNR of the best image.

If you have several images (say n images) with the same SNR, then the SNR of the stack will be given by

SNR(stack) = sqrt(n) x SNR(subframe)

In other words, the SNR of the stack will improve as n (the number of subframes) increases. But if you add poor images to the stack, then the SNR of the stack may drop, and that is why you assign weights and rejection thresholds to the subframes.

In between, there may be frames that are not as good but whose net effect is not negative.
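To put numbers on this, here is a small sketch of the 10-plus-1 frame example. It assumes an unweighted sum, uncorrelated noise adding in quadrature, and an extra frame with the same signal but c times the noise of the others; the function names and values are illustrative only.

```python
import math


def stack_snr(signals, noises):
    """SNR of an unweighted sum of frames whose noise is uncorrelated."""
    return sum(signals) / math.sqrt(sum(n * n for n in noises))


def snr_after_extra_frame(n, c, s=1.0, noise=1.0):
    """SNR of n equal frames plus one frame with the same signal and c times the noise."""
    return stack_snr([s] * (n + 1), [noise] * n + [c * noise])


if __name__ == "__main__":
    n = 10
    base = stack_snr([1.0] * n, [1.0] * n)  # equals sqrt(n) for equal frames
    print(f"{n} equal frames: {base:.3f}")
    for c in (1.0, 1.4, 1.5, 2.0):
        print(f"extra frame with {c}x noise: {snr_after_extra_frame(n, c):.3f}")
```

Under these assumptions an extra frame of normal quality (c = 1) always helps, while the unweighted stack only gets worse once the extra frame's noise exceeds roughly sqrt(2 + 1/n) times that of the others.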

Cheers,

André

|

This certainly agrees with my experience in practice when trying to combine OSC NB data, albeit admittedly without having worked out the mathematics of it!

I recently did some testing of the various ways of combining the B and G channel data (L-Extreme / 533MC) and found that, when appropriately weighted (1 vs 2 pixels / accounting for differing QE values), the SNR of the combined Oiii data was only very slightly (~0.1 dB) higher than that of the G channel data alone.
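A weighted sum can be explored with the same quadrature-noise model used earlier in the thread. In this sketch a weight w is applied to blue before adding it to green; under that model the gain over green alone is maximized at w = a / b^2 (the usual inverse-variance result) and then equals sqrt(1 + (a/b)^2). The values of a and b below are hypothetical, not measurements.

```python
import math


def weighted_gain(a, b, w):
    """SNR of G + w*B relative to G alone, with a = S_B/S_G and b = N_B/N_G."""
    return (1.0 + w * a) / math.sqrt(1.0 + (w * b) ** 2)


def best_weight(a, b):
    """Weight on blue that maximizes weighted_gain under this model."""
    return a / (b * b)


if __name__ == "__main__":
    a, b = 0.5, 1.5  # hypothetical: blue has half the signal and 1.5x the noise
    w = best_weight(a, b)
    print(f"plain sum (w=1):      {weighted_gain(a, b, 1.0):.3f}")
    print(f"best weight w={w:.3f}: {weighted_gain(a, b, w):.3f}")
```

With a weak, noisy blue channel the optimal weight is small and the gain stays close to 1, which would be in line with seeing only a slight improvement over green alone.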

I also investigated the possibility of trying to recover additional Sii signal from the B & G channels and came to the conclusion that all sensible combinations / weightings always tended towards the R channel alone being the best SNR. I guess this explains why! I'd originally, naively, assumed that since the 533MC has ~5% B and ~25% G sensitivity at Sii I might be able to combine them with an appropriate weighting.

It does beg the question, however: would it be possible to come up with a sub-frame weighting/rejection formula that rejects frames that would make the overall stack worse, as opposed to having to guess at fixed ratios and hard cut-offs for various parameters? I guess you could use the SNR of the "median" sub-frame as a seed (since you wouldn't know the SNR of the overall stack at this point)?

|

It's very interesting to analyze this topic from a math perspective, but we also need to understand which formulae we are using and where these formulae come from. When combining only two images (in this case a blue and a green channel), each one with a defined amount of signal and noise, the formula for adding the noise is not the one you're using for calculating SNR (the root of the sum of squared noises). Why not? Because that formula is a description of a statistical distribution that only makes sense when adding (stacking) a significant number of samples, not just two.

Then, how do we add the noise of only two images? We can't do it with a formula. But we can combine the images and compare, just like you mentioned at the bottom of the post. IMHO, that's the only way.

Of course, I might be wrong. Just sharing my point of view.

My final thought is: get a mono cam!

|

Why bother doing any kind of channel manipulation in the first place? The OSC is already capturing the Ha and O3 photons into the RGB channels. You don't need to do anything to combine them. It's already done via whatever de-mosaic algorithm you use. Sure, the process interpolates the missing values, but algorithms like VNG make very _good_ guesses at what a pixel's value should be.

All OSC camera sensors have spillover in pixel response. Even though your filter has narrow bandpasses of light around Ha and O3 wavelengths, that light is getting picked up by R, G and B pixels. You can't say "red is Ha". No it isn't. Red pixels also picked up O3. Similarly, green and blue pixels are picking up Ha. Thus, you can't say "green is only O3". If you want to ensure Ha goes to R only and O3 goes to G and B only, use a mono camera with dedicated filters.

Don't get me wrong... there are plenty of fun things you can do with your data. Bill Blanshan's pixel math scripts are a great example. Manipulate channels to your heart's content - it's your data and your image. Have at it.

|

Jonny Bravo:

Why bother doing any kind of channel manipulation in the first place? The OSC is already capturing the Ha and O3 photons into the RGB channels. You don't need to do anything to combine them. It's already done via whatever de-mosaic algorithm you use. Sure, the process interpolates the missing values, but algorithms like VNG make very _good_ guesses at what a pixel's value should be.

All OSC camera sensors have spillover in pixel response. Even though your filter has narrow bandpasses of light around Ha and O3 wavelengths, that light is getting picked up by R, G and B pixels. You can't say "red is Ha". No it isn't. Red pixels also picked up O3. Similarly, green and blue pixels are picking up Ha. Thus, you can't say "green is only O3". If you want to ensure Ha goes to R only and O3 goes to G and B only, use a mono camera with dedicated filters.

Don't get me wrong... there are plenty of fun things you can do with your data. Bill Blanshan's pixel math scripts are a great example. Manipulate channels to your heart's content - it's your data and your image. Have at it.

There's a thread on Cloudy Nights discussing this very topic:

https://www.cloudynights.com/topic/682340-monochrome-vs-one-shot-color-–-by-the-numbers-please/page-2

Jdupton proposes a specific weighting methodology to capture the RGB contributions to Ha and OIII when collecting these data through a dual band filter with an OSC. The methodology is based on accounting for the total photon flux received by the sensor through its color filter and then apportioning these photons appropriately to create Ha and OIII defined by the dual band filter location and filter widths. For example using an LeNhance filter and a Sony color sensor the mix of RGB one would use to create OIII and Ha is given by jdupton's methodology as:

Ha = .92*R + .07*G + .03*B

OIII = .05*R + .60*G + .51*B

You can download the spreadsheet jdupton has put together from the thread and play with various filter/sensor configurations.
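As an illustration, the mix quoted above can be applied per pixel like this. It is a sketch only: the coefficients are the ones quoted for the LeNhance plus Sony example, the function name is made up, and it assumes linear R, G and B values with any offset already removed.

```python
def dual_band_mix(r, g, b):
    """Apply the quoted weights to one pixel's R, G, B values; returns (Ha, OIII)."""
    ha = 0.92 * r + 0.07 * g + 0.03 * b
    oiii = 0.05 * r + 0.60 * g + 0.51 * b
    return ha, oiii


if __name__ == "__main__":
    # Hypothetical linear pixel values in arbitrary units.
    ha, oiii = dual_band_mix(0.40, 0.10, 0.05)
    print(f"Ha={ha:.4f} OIII={oiii:.4f}")
```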

|

Tom Boyd:

There's a thread on Cloudy Nights discussing this very topic:

https://www.cloudynights.com/topic/682340-monochrome-vs-one-shot-color-–-by-the-numbers-please/page-2

Jdupton proposes a specific weighting methodology to capture the RGB contributions to Ha and OIII when collecting these data through a dual band filter with an OSC. The methodology is based on accounting for the total photon flux received by the sensor through its color filter and then apportioning these photons appropriately to create Ha and OIII defined by the dual band filter location and filter widths. For example using an LeNhance filter and a Sony color sensor the mix of RGB one would use to create OIII and Ha is given by jdupton's methodology as:

Ha = .92*R + .07*G + .03*B

OIII = .05*R + .60*G + .51*B

You can download the spreadsheet jdupton has put together from the thread and play with various filter/sensor configurations.

Thanks for pointing out that thread... I'll have to read through it. John does some pretty detailed analyses. I've worked with him in the past to produce some data on the 294MM Pro regarding short exposure linearity. Maybe he (or someone in the thread) provides a good reason for manipulating the data to create these new representations of Ha and O3. I don't understand why you'd want/need to, though. You already have a spread of Ha and O3 across the R, G and B channels - the OSC sensor gave it to you.

|

Jonny Bravo:

You can't say "red is Ha". No it isn't. Red pixels also picked up O3. Similarly, green and blue pixels are picking up Ha. Thus, you can't say "green is only O3".

@Jonny Bravo it will depend on how much signal the microfilters will let in. For example, an ASI 2600MC will convert less than 5% of the incoming Oiii on the Red pixels and about 20% of incoming Ha into Blue and Green channels (5% in blue and 15% in green) - that's not much and still does not affect the final conclusion.

There are lots of tutorials claiming that, since Oiii is picked up in the green and blue channels, if you somehow add both channels you'll get the full amount of Oiii picked up by the camera (and thus a stronger SNR). The point of what I wrote is that this may not always be true: if the SNR of the blue channel is much worse than the SNR of the green channel (as it usually is), then it is likely that combining them won't give you a better result than the green channel alone and, probably, you'll get a worse result in terms of SNR.

Just that.

|

Andre Vilhena:

it will depend on how much signal the microfilters will let in. For example, an ASI 2600MC will convert less than 5% of the incoming Oiii on the Red pixels and about 20% of incoming Ha into Blue and Green channels (5% in blue and 15% in green) - that's not much and still does not affect the final conclusion.

But why go through all the rigamarole of doing these channel mappings and pixel math conversions in the first place? That dual bandpass filter has let through only Ha and O3 light. It's spread across three channels: R, G and B. If you want to do an HOO image... you already only have H and O light captured and it's already mapped. You don't then need to manipulate the data to say... OK, well, I'm going to call the red channel Ha and then I'm going to call the green channel O3... and I'm going to just throw away the blue channel... then I'm going to make this entirely new image mapping Ha to red and O3 to both green and blue.

Maybe I'm just dense... I can accept that conclusion, too

|

@Jonny Bravo I think we are talking different things here...

The rigmarole you mention is advocated by a lot of people for making HOO images: instead of mapping O3 to blue and green, or mapping it just to green, the theory goes that if you combine the O3 contained in the blue and green channels, you'll get an improved image to use in the HOO combination.

My point is exactly that this might not be true in all cases and therefore there's no need to do any combination. In those cases, using just the Oiii captured in the green channel (higher SNR) may be the best approach. And it is possible to use a PixInsight tool to confirm which option is best.

Cheers,

André

|

For me personally I tend to split the channels and do a recombination that's not represented with traditional HOO colouring, instead I create a false 'hubble palette' and process that way, simply because I think it looks prettier!

In the situation where you've split the channels for processing and have them in front of you - a strong red, strong green and relatively weak blue, then trying to make use of the blue channel is akin to stacking good data with bad data during integration - data with clouds in it, or moonlight for example - basically always better to discard frames like that, right?

Cheers! 👍

|

Interesting thread.

Since I just bought an L-eXtreme to use in combination with my DSLR, I'm curious to experiment with the blue and green channels!

|

I like Bray Falls' recent YouTube video on the topic. It's easy and he explains it pretty well in a way that makes sense.

https://www.youtube.com/watch?v=X807ulwfu5g

|

@afd33 It's easy and he explains it pretty well in a way that makes sense.

Hi,

It may be easy, but the issue with that tutorial is that he only tells part of the story: the signal. As far as the signal is concerned, he's right.

But he doesn't talk about the noise: at the same time as you're adding a bit of signal to the green channel, you're also adding the noise of the blue channel, which is typically larger than the noise in the green channel.

So, you are adding a good thing (signal) and a bad thing (noise). What's the outcome? It depends. But it is certainly not always better, as that video conveys.

Cheers,

André

|

@Andre Vilhena

In your analysis you're combining the B and G channels and assuming differing scaling factors for both Signal and Noise

SB = a.SG and NB=b.NG

I don't think that's exactly accurate. The noise term in the S/N is always sqrt(N), where N is the ADU count of a pixel, due to the Poisson nature of measuring light/photons.

So the brighter the pixel the greater the SNR.

https://youtu.be/3RH93UvP358?t=2138

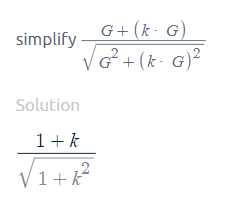

Going forward let G = the ADU count of the green pixel and B = the ADU count of the blue pixel. From this we have:

SNR(G) = G / sqrt(G) ; doubles every 4x increase in G

Now let's consider what happens to the ADU count and noise if we add the B and G together:

new ADU count: G + B

new noise: sqrt(G^2 + B^2)

This means that the SNR of the combined image becomes:

SNR(combined): (B + G) / sqrt(B^2 + G^2)

https://youtu.be/3RH93UvP358?t=2472

Now let's consider what happens if B is related to G by a scaling factor 'k':

new ADU count: G + (k * G)

new noise: sqrt(G^2 + (k * G)^2)

SNR(combined): (G + (k * G)) / sqrt(G^2 + (k * G)^2)

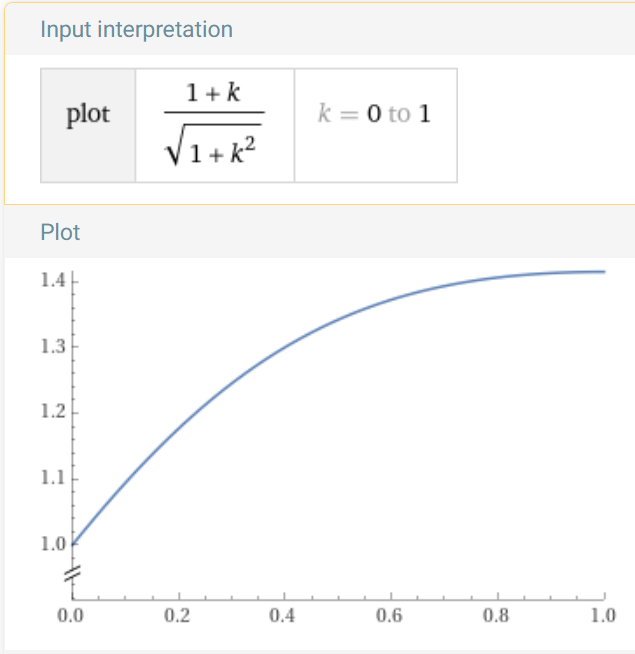

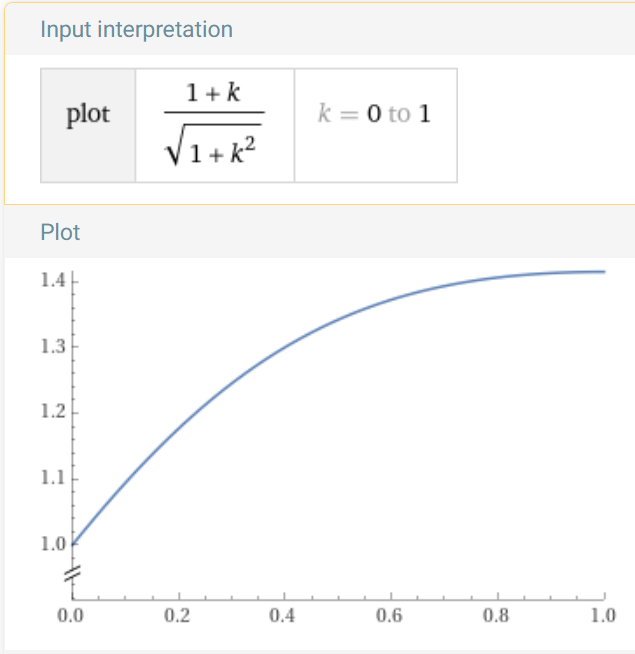

SNR(combined): (1 + k) / sqrt(1 + k^2) ; note this is independent of actual ADU values

SNR(combined) will be up to 1.41x greater than SNR(G) as 'k' goes from 0 to 1 ; note: 2 / sqrt(2) ~= 1.41
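The expression above can be tabulated over k to see how it moves between its two endpoints. This sketch only evaluates the formula exactly as written in this post; the function name and the grid of k values are illustrative.

```python
import math


def combined_ratio(k: float) -> float:
    """Evaluate (1 + k) / sqrt(1 + k^2), the expression given above."""
    return (1.0 + k) / math.sqrt(1.0 + k * k)


if __name__ == "__main__":
    # Hypothetical grid: k from 0.0 to 1.0 in steps of 0.25.
    for i in range(5):
        k = 0.25 * i
        print(f"k={k:.2f}  ratio={combined_ratio(k):.3f}")
```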

Now let's consider the Sony IMX571 color sensor's QE curves:

https://www.cloudynights.com/uploads/monthly_01_2021/post-324590-0-51288200-1609989067.png

You can see from the chart that the G channel is about 85% and the B channel is about 50%, which gives a B->G scaling factor of 50/85 = 0.588. Plugging that into the formula above gives an SNR increase of 1.588 / sqrt(1 + 0.588^2) = 1.588 / sqrt(1.346) ~= 1.37x at that wavelength on that sensor.

Note:

Now when you add G and B together you have to be careful to first remove any 'pedestal' from each color before adding them together.
