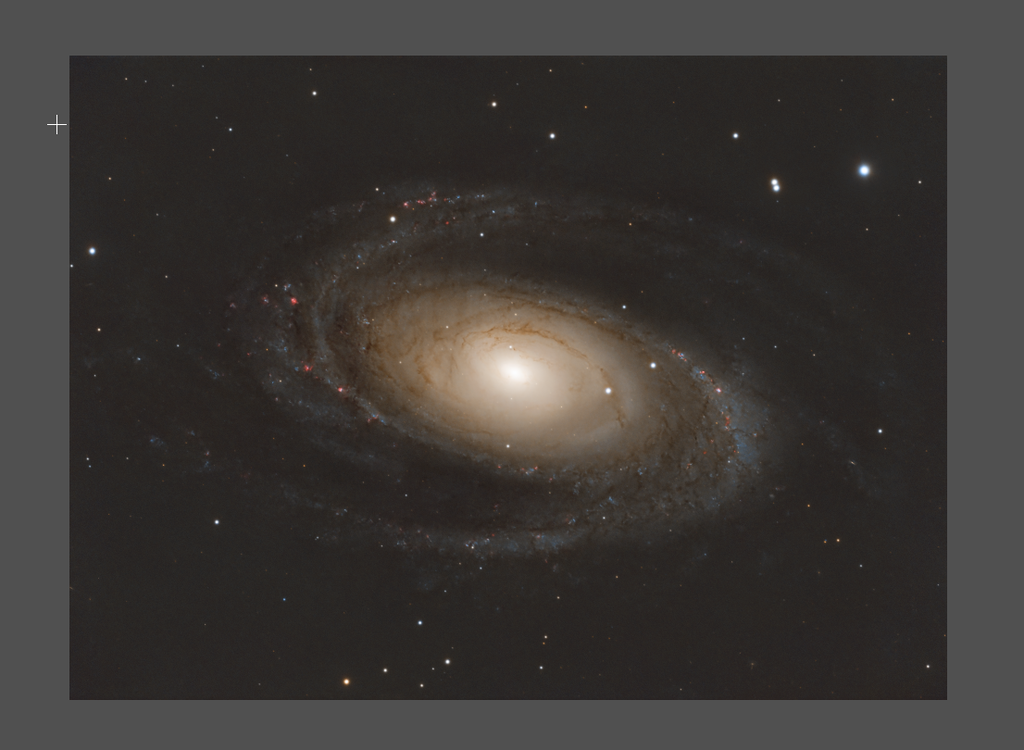

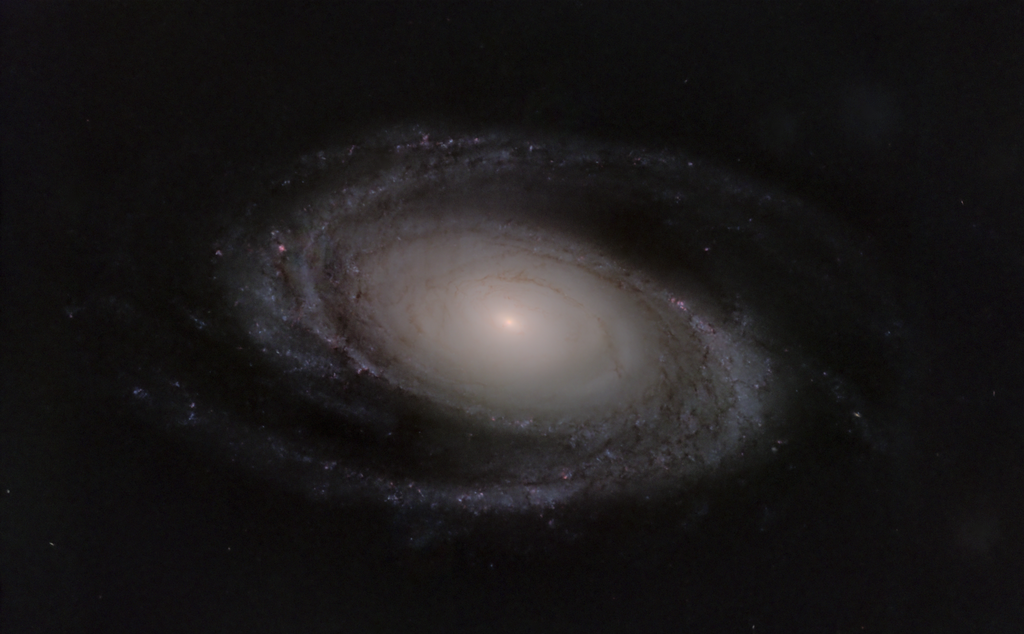

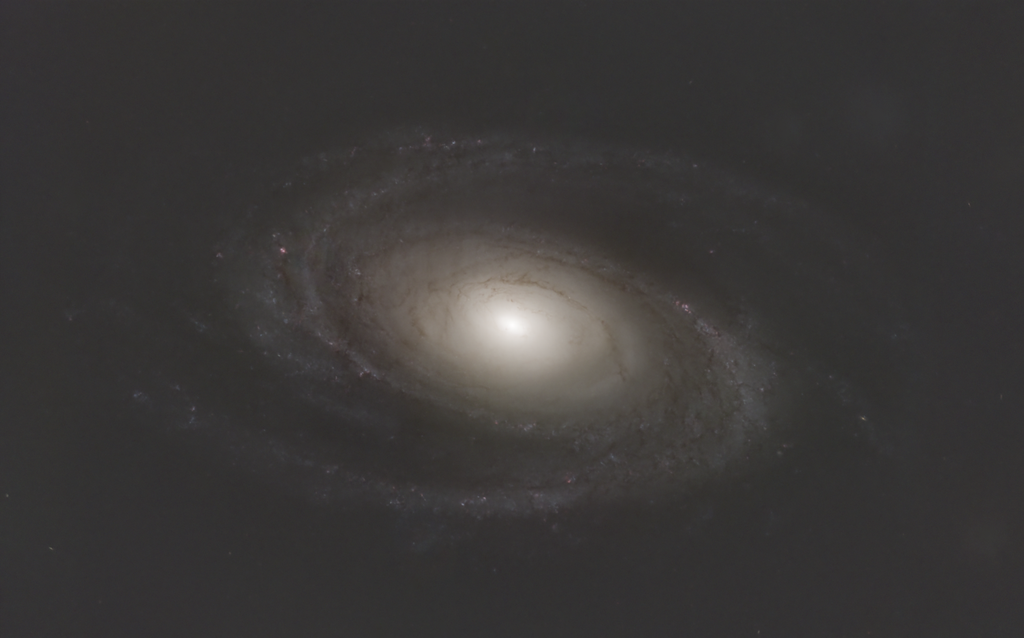

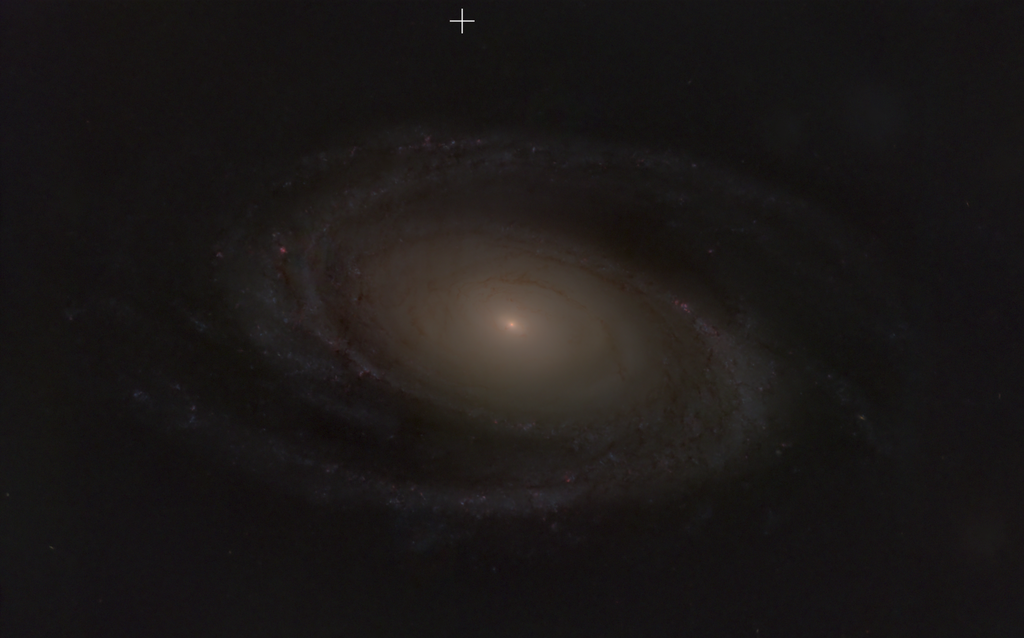

After swapping the B and G channels I did a quick edit to see how it would turn out. This is by no means an end result, as more careful processing could be done with GHS and HDRMT - I basically just dragged some PixelMath stretching onto it. It's quite possible to get something decent from the data.


Gary Imm:

Wow, Jan Erik, you may be right! Working with Arny's masters, I could never achieve a decent color-calibrated image. I told Arny that I felt there was a calibration issue somewhere in the process. But now switching those 2 channels made all of the difference. Only Arny will know if that is the case, but it is the best explanation so far.

Jan Erik, how did you decide to try that?

I just had a hunch really, based on the strange purple hue in the core. The R channel looked right, so I set up a comparison view of the labelled G and B to see which showed the strongest signal in the core (as it should be more red and green than blue). The channel labelled G has more dust lanes and a slightly weaker core as far as I could see. So when the stronger signal gets put into the blue channel it overpowers the green and the core turns purple instead.
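For anyone who wants to repeat that check numerically, here is a rough Python sketch. It assumes the two masters are already loaded as 2D lists of linear, background-subtracted values on a comparable scale. The function names, the box size and the sample numbers are made up for illustration and are not taken from Arny's data.

```python
from statistics import median


def core_median(channel, cx, cy, radius=1):
    """Median pixel value in a square box centred on (cx, cy)."""
    box = [
        channel[y][x]
        for y in range(cy - radius, cy + radius + 1)
        for x in range(cx - radius, cx + radius + 1)
    ]
    return median(box)


def looks_swapped(labelled_g, labelled_b, cx, cy, radius=1):
    """True when the master labelled B has the brighter core.

    Assumption: a galaxy core should be stronger in G than in B.
    """
    g_core = core_median(labelled_g, cx, cy, radius)
    b_core = core_median(labelled_b, cx, cy, radius)
    return b_core > g_core


# Hypothetical 3x3 cut-outs around the core (not real data).
labelled_g = [[0.10, 0.12, 0.10], [0.12, 0.20, 0.12], [0.10, 0.12, 0.10]]
labelled_b = [[0.15, 0.18, 0.15], [0.18, 0.35, 0.18], [0.15, 0.18, 0.15]]

print(looks_swapped(labelled_g, labelled_b, cx=1, cy=1))
```

A positive result is only a hint, since exposure time and filter throughput also change the relative levels, so it is worth confirming visually the way it was done here.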


Thanks Jan Erik! Impressive hunch.


Yeah Blue and Green are swapped.

To add, it appears that there is a flat overcorrection as well as a failure to remove some dust motes. Overcorrection usually occurs when there is a gain/bias mismatch across the flats, bias and lights. Dust motes can show up if the camera has been removed and new matching flats have not been correctly applied.
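To make the overcorrection mechanism concrete, here is a minimal Python sketch with invented numbers. It assumes a two-pixel "frame" (centre and corner), 20 % vignetting in the corner, and a camera offset of 100 ADU. The `calibrate` helper is hypothetical and is not how WBPP implements calibration.

```python
def calibrate(light, flat, light_bias, flat_bias):
    """Bias-subtract both frames, normalise the flat to its peak, then divide."""
    flat_sub = [f - flat_bias for f in flat]
    peak = max(flat_sub)
    return [(l - light_bias) / (f / peak) for l, f in zip(light, flat_sub)]


# Hypothetical frame: [centre, corner], uniform sky, corner vignetted by 20 %.
OFFSET = 100
vignette = [1.0, 0.8]
light = [OFFSET + 1000 * v for v in vignette]
flat = [OFFSET + 20000 * v for v in vignette]

# Matching bias: the uniform sky comes out flat again.
matched = calibrate(light, flat, light_bias=OFFSET, flat_bias=OFFSET)

# Mismatched bias (e.g. bias frames taken at a different offset setting):
# too much is subtracted from the flat, so its vignetting looks deeper than it is.
mismatched = calibrate(light, flat, light_bias=OFFSET, flat_bias=500)

print(matched)
print(mismatched)
```

With the matched bias both pixels end up at about the same level. With the mismatched bias the corner ends up roughly half a percent brighter than the centre, which is the bright-corner look of an overcorrected flat.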


Die Launische Diva:

Hi Arny,

what does a simple RGB integration look like?

By simple I mean just combine the R, G, B channels with ChannelCombination, remove gradients with the new GradientCorrection tool and perform SPCC with background normalization. You can probably reverse the order of GC/SPCC, since we are still waiting to learn the best practice for using SPCC with GC, but this won't matter much. At the moment it is important to follow a rudimentary processing workflow.

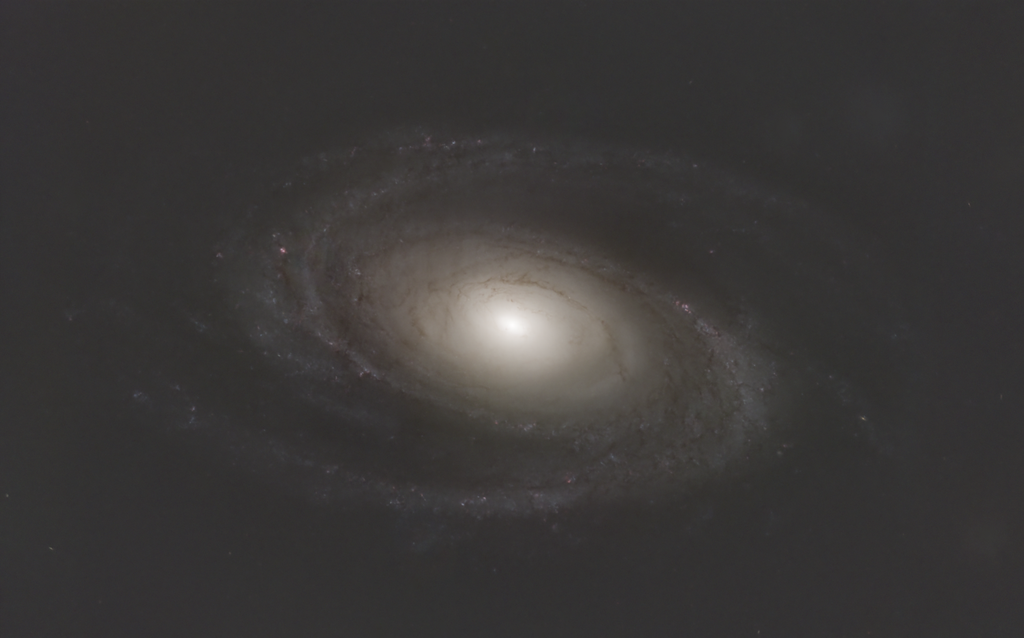

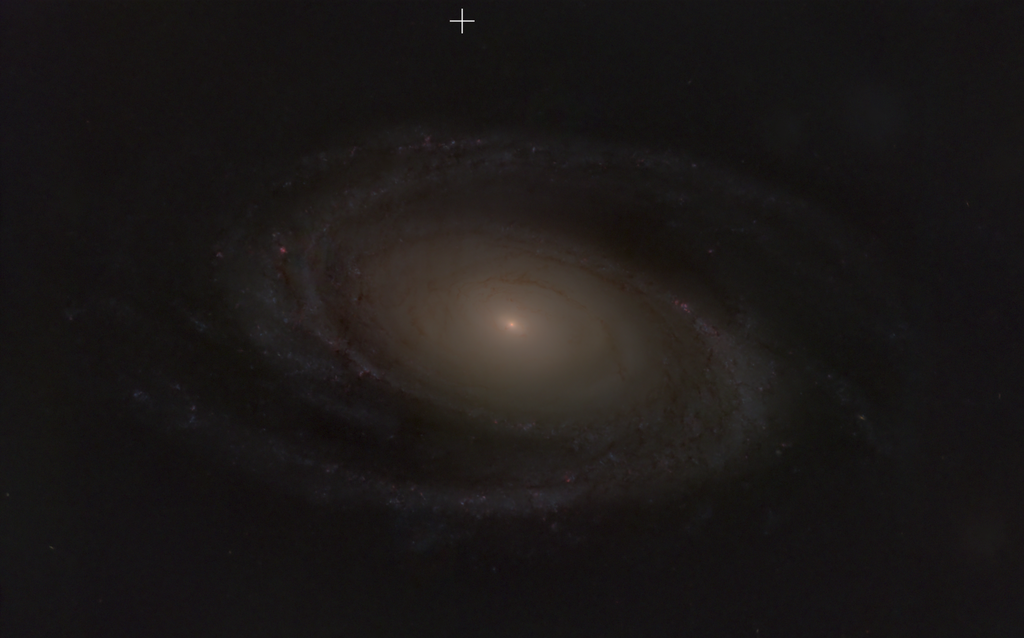

Hi Diva,

I just redid from scratch and this is RGB linear with only PI GradientCorrection and SPCC done:


Jan Erik Vallestad:

Gary Imm:

Wow, Jan Erik, you may be right! Working with Arny's masters, I could never achieve a decent color-calibrated image. I told Arny that I felt there was a calibration issue somewhere in the process. But now switching those 2 channels made all of the difference. Only Arny will know if that is the case, but it is the best explanation so far.

Jan Erik, how did you decide to try that?

I just had a hunch really, based on the strange purple hue in the core. The R channel looked right, so I set up a comparison view of the labelled G and B to see which showed the strongest signal in the core (as it should be more red and green than blue). The channel labelled G has more dust lanes and a slightly weaker core as far as I could see. So when the stronger signal gets put into the blue channel it overpowers the green and the core turns purple instead.

OMG - I must have mixed the filters when I last installed them a while ago :-(

But incredible that you spotted it @Jan Erik V - Thank you!

At least I can remove the dust motes when I swap the filters ...


To add, it appears that there is a flat overcorrection as well as a failure to remove some dust motes. Overcorrection usually occurs when there is a gain/bias mismatch across the flats, bias and lights.

Thanks Vercastro,

that flats bias issue I need to investigate ...

Arny


Geoff:

Just using HDRMultiscale transform makes a big difference

wow, quite impressive, Geoff!

Arny


Geoff:

Just using HDRMultiscale transform makes a big difference

So, I am going to offer my honest opinion here, in hopes it will help you improve.

This is very over-done. Take a look at the version Arny shared. That is a great start, and really, that version wouldn't need much additional processing to make the most of the data that was acquired.

As a reference point for future processing: this image pushed the data too far, beyond what it could handle, and the results are breaking down.

Leverage a measured hand, and don't push your processing too far.


When I see the version that Arny shared, I still see residual gradients. That, and the general lack of separation from the background (taken at a heavily light-polluted site, I would assume), is going to fundamentally limit what you are able to do here. The solution is either to get more data or to limit the processing and live with a less deep image.

This ties in nicely with some of the points being discussed in other threads: stretching is about increasing contrast, and separating closely spaced data values in linear space requires very good signal-to-noise. In the absence of that, you are forced to do things like over-darken the background (to hide gradients) or live with artifacts because the signal separation just isn't there to support the stretch. Processing is not a substitute for good data.
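The point about contrast can be illustrated with the midtones transfer function used by histogram-style stretches. This is only a sketch: the pixel values and the midtones setting below are invented, and a real stretch is usually applied in several gentler steps.

```python
def mtf(x, m):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5 and 1 -> 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return ((m - 1.0) * x) / ((2.0 * m - 1.0) * x - m)


# Hypothetical faint values in linear space, only 0.001 apart.
faint_a, faint_b = 0.010, 0.011
midtones = 0.01  # aggressive stretch: the value 0.01 is pulled up to 0.5

separation_before = faint_b - faint_a
separation_after = mtf(faint_b, midtones) - mtf(faint_a, midtones)
gain = separation_after / separation_before

print(f"local contrast gain: {gain:.1f}x")
```

In this example the separation between the two faint values grows by a factor of roughly 24. Any noise sitting on those values is amplified by the same factor, which is why the stretch only holds up when the linear data already has good signal-to-noise.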


Jan Erik Vallestad:

After swapping the B and G channels I did a quick edit to see how it would turn out. This is by no means an end result, as more careful processing could be done with GHS and HDRMT - I basically just dragged some PixelMath stretching onto it. It's quite possible to get something decent from the data.

There we go! That is not bad data for the very light polluted skies it was acquired under. This is a nice result.


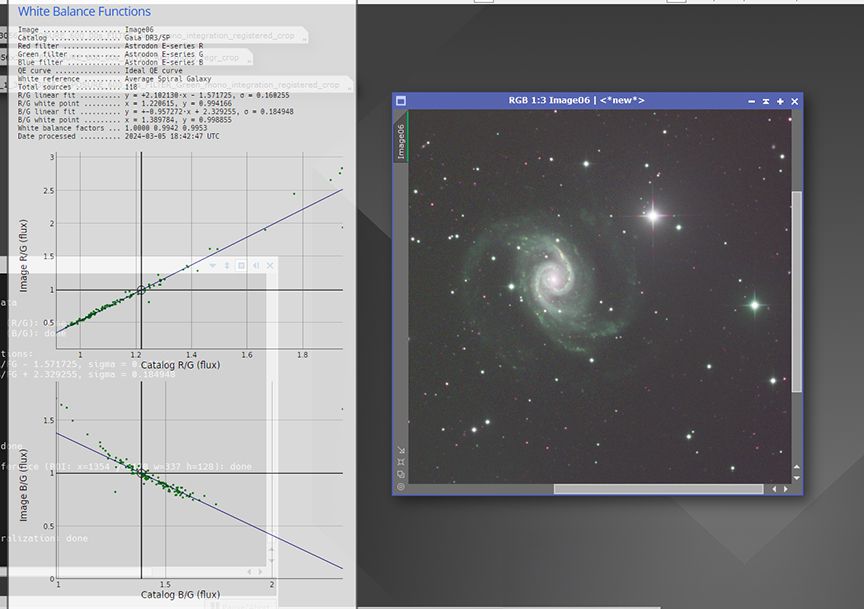

George Hatfield:

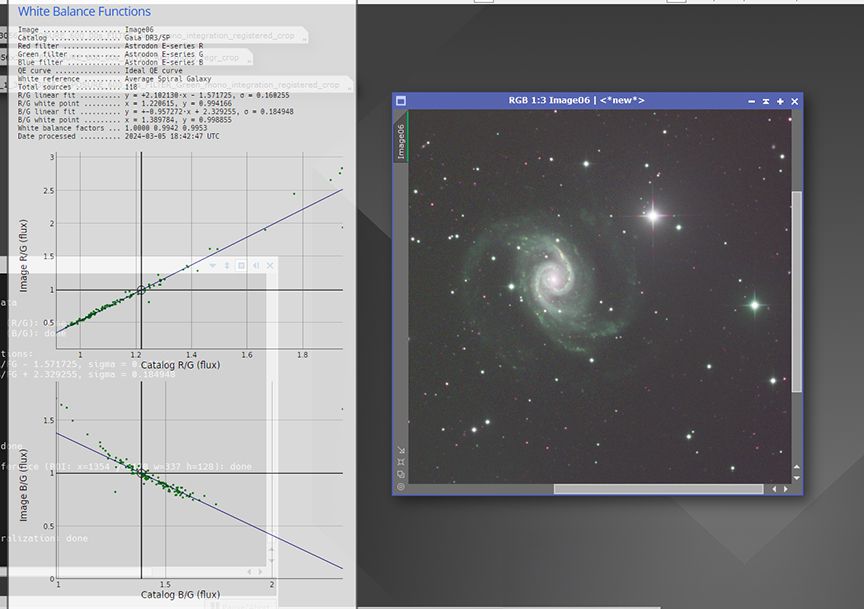

I processed the 300-second R, G and B masters. Before combining them into the RGB I ran LinearFit with red as the reference, since there was a huge difference in the means. I then ran the RGB through GraXpert, which seemed to work fine. Then color calibration with SPCC. I used Astrodon filters. I don't think the filter type makes much of a difference. Anyway, the SPCC curves were very unusual... I have not seen them running in opposite directions in any of the images I have processed. Also the central core is way too purple even when calibrated and the arms of the galaxy look sort of "coagulated." Could there be a problem with the WBPP processing? I don't think any amount of processing is going to help this image. I would go back to the very beginning and start over.

George

as I never had the filters right, my SPCC charts always looked weird like this - and yes, the purple core drove me crazy - great you spotted it!
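For readers unfamiliar with the linear-fit step George mentions, the idea can be sketched in a few lines of Python: find a scale and offset that bring one channel onto the level of a reference channel. This is a plain least-squares illustration with invented pixel values. PixInsight's own LinearFit process is more elaborate, and the helper names here are hypothetical.

```python
from statistics import mean


def linear_fit(target, reference):
    """Least-squares a, b such that a * target + b approximates reference."""
    mean_t, mean_r = mean(target), mean(reference)
    cov = sum((t - mean_t) * (r - mean_r) for t, r in zip(target, reference))
    var = sum((t - mean_t) ** 2 for t in target)
    a = cov / var
    b = mean_r - a * mean_t
    return a, b


def apply_fit(target, a, b):
    """Rescale the target channel onto the reference level."""
    return [a * t + b for t in target]


# Hypothetical flattened pixel samples; green is much fainter than red.
red = [0.10, 0.20, 0.30, 0.40]
green = [0.03, 0.05, 0.07, 0.09]

a, b = linear_fit(green, red)
green_fitted = apply_fit(green, a, b)
print(a, b)
```

Note that a fit like this equalises the channel levels regardless of which filter each master really came from, so it cannot reveal or repair a G/B mix-up on its own.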


Dear Arny, yes, definitely, the G-B filters are inverted. Like others, I also ran a test and found the same result. Here are my findings: I used the 180s RGB data and the Ha in a very rushed processing run, so it is doable. Below is a screenshot from my PI. Something better can be done on the gradient and on the stars. I also think the flats are overcorrecting, so maybe you can check the master flat as well. CS Timo


As others have done above, I switched the green and blue masters when creating an RGB and got a similar aberration of colors (too much purple and green) with this image of NGC1566 that I am working on. Also, the SPCC curves showed the same slope reversal for one of the curves.

One question that remains for me is how this mixup occurred. The file names were wrong and looked like something WBPP may have created. Did it somehow misidentify the filters of the subs it was processing?

George


George Hatfield:

As others have done above, I switched the green and blue masters when creating an RGB and got a similar aberration of colors (too much purple and green) with this image of NGC1566 that I am working on. Also, the SPCC curves showed the same slope reversal for one of the curves.

One question that remains for me is how this mixup occurred. The file names were wrong and looked like something WBPP may have created. Did it somehow misidentify the filters of the subs it was processing?

George

I believe he mentions that he has swapped them physically, which then leads to the wrong filename.


Jan Erik Vallestad:

George Hatfield:

As others have done above, I switched the green and blue masters when creating an RGB and got a similar aberration of colors (too much purple and green) with this image of NGC1566 that I am working on. Also, the SPCC curves showed the same slope reversal for one of the curves.

One question that remains for me is how this mixup occurred. The file names were wrong and looked like something WBPP may have created. Did it somehow misidentify the filters of the subs it was processing?

George

I believe he mentions that he has swapped them physically, which then leads to the wrong filename.

Yes, stupid me changed the filters after cleaning and put SHO first in the wheel. When replacing them I must have put them in the order RBG but named them RGB - everything from there on was a mix-up. It's just that I believed it was normal to always have a magenta bias …


Timothy Prospero:

Dear Arny, yes, definitely, the G-B filters are inverted. Like others, I also ran a test and found the same result. Here are my findings: I used the 180s RGB data and the Ha in a very rushed processing run, so it is doable. Below is a screenshot from my PI. Something better can be done on the gradient and on the stars. I also think the flats are overcorrecting, so maybe you can check the master flat as well. CS Timo

Another great result!

Sounds like the G-B inversion was part of the issue before.

It's often quite helpful to have other imagers take a look and see what they can do if you are having trouble yourself. Sometimes other people can demonstrate just what is possible with a given data set. Even when the data is not sublime, you can often still make do and produce great images regardless.


Jon Rista:

Geoff:

Just using HDRMultiscale transform makes a big difference

So, I am going to offer my honest opinion here, in hopes it will help you improve.

This is very over-done. Take a look at the version Arny shared. That is a great start, and really, that version wouldn't need much additional processing to make the most of the data that was acquired.

As a reference point for future processing: this image pushed the data too far, beyond what it could handle, and the results are breaking down.

Leverage a measured hand, and don't push your processing too far.

It was just a quick and dirty process from a screen grab to suggest a way of getting some of the inner details. It was not a serious suggestion of the end result. I should have been clearer about that.

Totally agree with your comments.


Arny:

Wow, some great advice guys - thank you all very much!

The common denominator of your advice is to stick more to the basics, use fewer tools, and not to overcorrect or clip.

Regarding stretching, I saw GHS and arcsinh as recommendations - is any other method, or even just a manual histogram transformation, favored?

GHS gives tighter control and arcsinh is supposed to preserve colour. Avoid using them on stars though, as it will make them look weird. Stars work best using histogram transformation.
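Why arcsinh tends to preserve colour can be sketched like this: the stretch factor is computed from the pixel's overall intensity and then applied equally to R, G and B, so the ratios between the channels stay the same. The sketch below is an assumption-laden illustration, with a made-up stretch factor and pixel, and it is not the exact formula of any particular tool.

```python
import math


def arcsinh_stretch(rgb, stretch=100.0):
    """Stretch one linear RGB pixel with a single factor for all channels."""
    intensity = sum(rgb) / 3.0
    if intensity <= 0.0:
        return (0.0, 0.0, 0.0)
    factor = math.asinh(stretch * intensity) / (intensity * math.asinh(stretch))
    # Clipping at 1.0 only affects very bright pixels; it does break the ratios there.
    return tuple(min(1.0, c * factor) for c in rgb)


# Hypothetical faint, reddish linear pixel.
pixel = (0.02, 0.01, 0.005)
stretched = arcsinh_stretch(pixel)

print(stretched)
print(stretched[0] / stretched[1], pixel[0] / pixel[1])
```

The pixel gets much brighter, but red is still twice green and green is still twice blue. A per-channel histogram stretch does not guarantee that, which is where the washed-out colour usually comes from.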


Jan Erik Vallestad:

The linear data looks good and sharp, but it comes off quite purple. I wonder if the channels might be mislabelled? It seemed a lot better when I did RBG (based on the file names) rather than RGB.

Edit: Added a screenshot of the unlinked STF for that particular combination

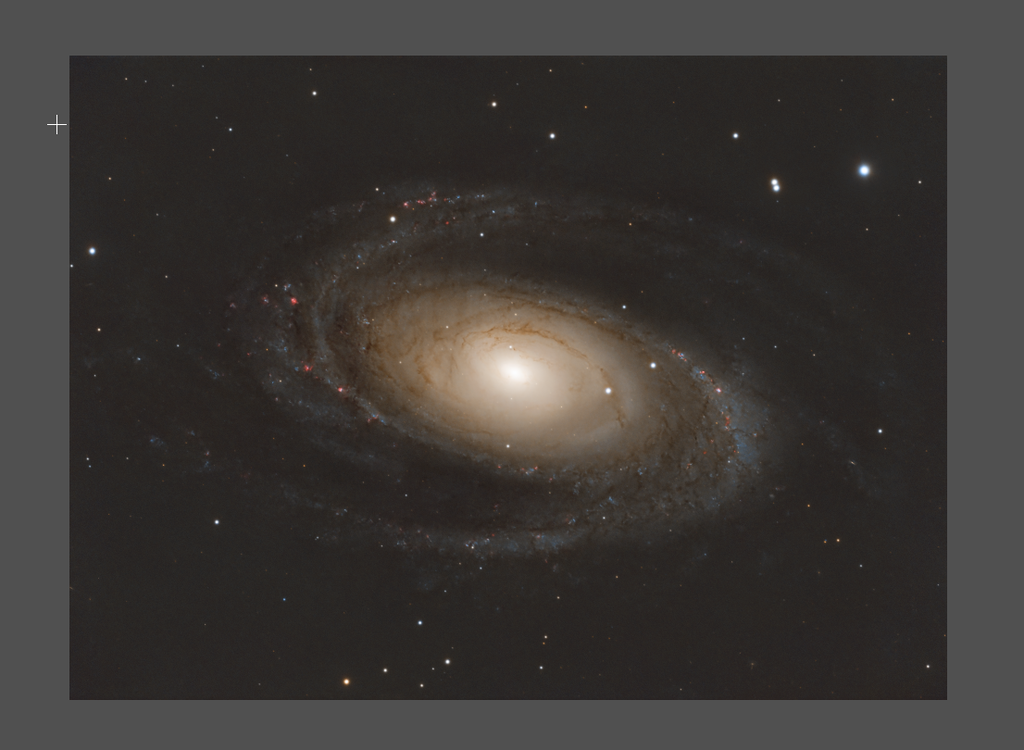

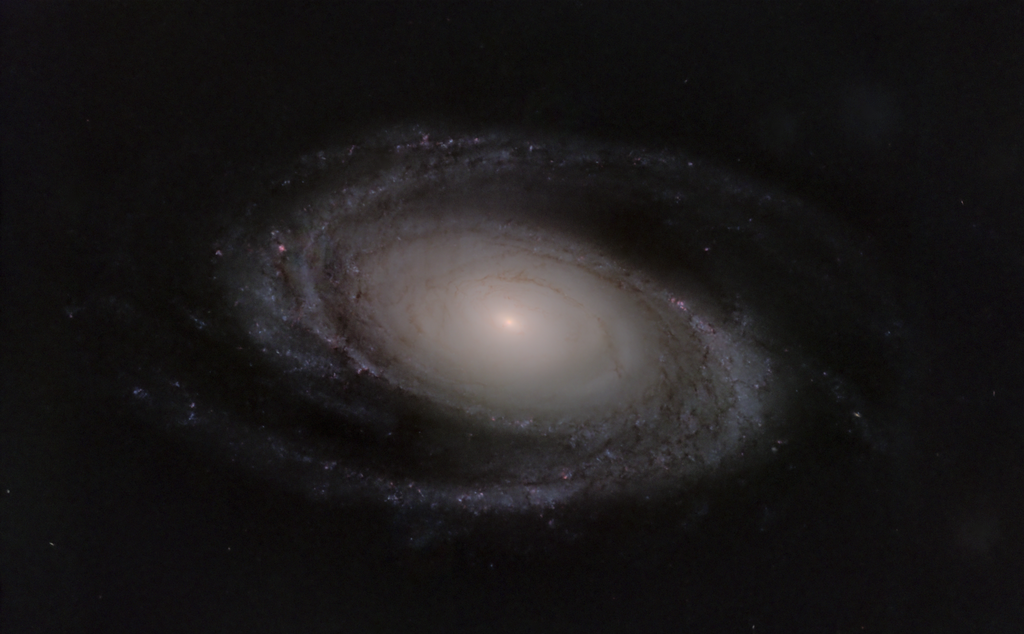

Arny,

I reversed the green and blue as was done above, then did an RGB integration, SPCC, HistogramTransformation, NoiseXTerminator and BlurXTerminator, and a minor color saturation boost.

I was wondering if it is possible you have the green and blue filters swapped in your filter wheel after looking at the results here.


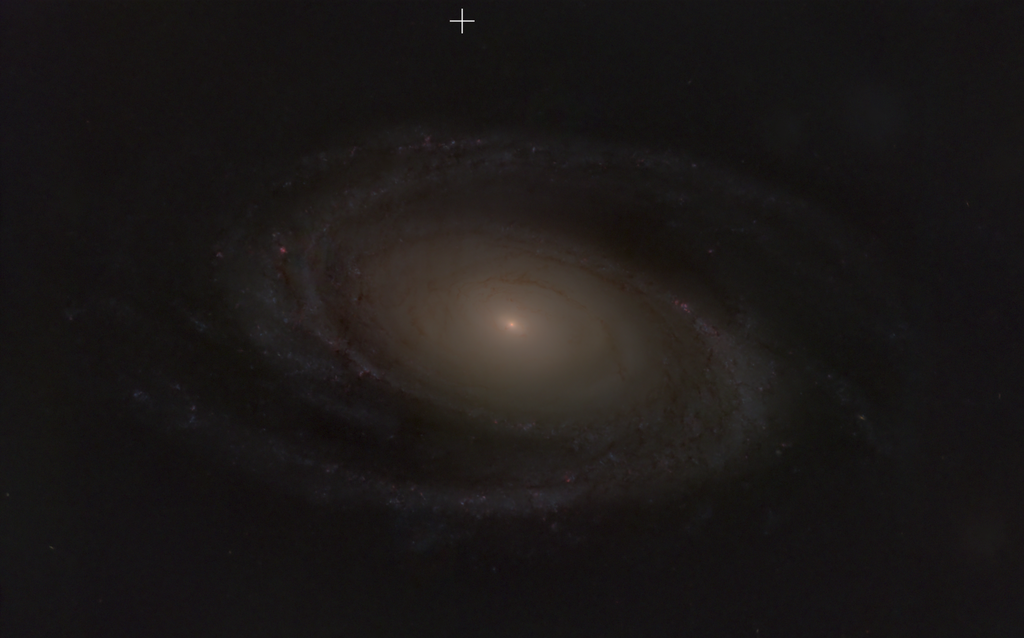

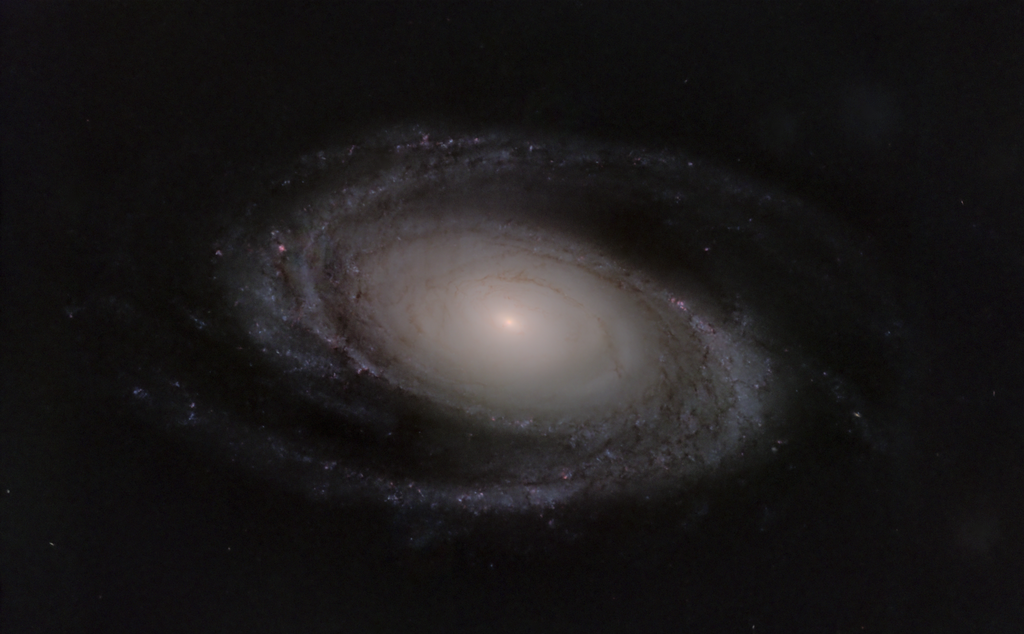

So, I have taken your great advice into account and reprocessed - the result is much more satisfying.

So what did I change?

1. the flats were taken with an LED lamp whose intensity varied from frame to frame, which led to weird master flats.

=> using sky flats now

2. I rechecked (and cleaned :-)) the filters: they were in correct order but I

=> corrected naming

3. let the raw data speak:

=> R,G,B channel only with Graxpert and SPCC looks like this

4. adding ContinuumSubtraction Ha into RGBHa using Toolbox script CombineHaWithRGB

5. BlurXTerminator, NoiseXTerminator and StarXTerminator

6. ArcsinhStretch and HistogramTransformation stretch

7. Curves Transformation masking the background to avoid amplification of clipped artefacts

8. Screenblend with the RGBH stars
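For reference, the screen blend in the last step follows a simple formula: invert both layers, multiply them, and invert the result. Here is a minimal Python sketch on flat lists of stretched values in the 0 to 1 range. The pixel values are invented and the function name is hypothetical.

```python
def screen_blend(starless, stars):
    """Screen blend: 1 - (1 - a) * (1 - b), applied pixel by pixel."""
    return [1.0 - (1.0 - a) * (1.0 - b) for a, b in zip(starless, stars)]


# Hypothetical stretched values: background, galaxy arm, pixel under a star.
starless = [0.05, 0.20, 0.20]
stars = [0.00, 0.00, 0.50]

combined = screen_blend(starless, stars)
print(combined)
```

Where the stars layer is black the starless image passes through essentially unchanged, and where a star sits the result brightens without ever exceeding 1, so the stars go back in without clipping the galaxy underneath.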

Observations:

- observing data quality on raw RGB and Ha was a great idea @Jon Rista

- fixing bad flats and its cause was really a big step (and cleaning is a great idea) @vercastro

- understanding the additive color scale and the meaning of a magenta tint caused by swapped B and G was ingenious @Jan Erik V

- very gentle iterations during stretching and curve is key - and also to keep multiple versions for backtracking @Gary Imm

- Ha integration must be very subtle to leave space for stretching later on in the red tone

- the stretched image needs to leave room for the curves adjustment, so it looks underwhelming at first; otherwise the curves step overcorrects

- processing in a bright room tempts you to overcorrect - the darker the better

- merging RGB and Ha after stretch was impossible

Thanks for your help!

Arny


Arny:

So, I have taken your great advice into account and reprocessed - the result is much more satisfying.

5. BlurXTerminator, NoiseXTerminator and StarXTerminator

6. ArcsinhStretch and HistogramTransformation stretch

7. Curves Transformation masking the background to avoid amplification of clipped artefacts

8. Screenblend with the RGBH stars

Observations:

- observing data quality on raw RGB and Ha was a great idea @Jon Rista

- fixing bad flats and its cause was really a big step (and cleaning is a great idea) @vercastro

- understanding the additive color scale and the meaning of a magenta tint caused by swapped B and G was ingenious @Jan Erik V

- very gentle iterations during stretching and curve is key - and also to keep multiple versions for backtracking @Gary Imm

- Ha integration must be very subtle to leave space for stretching later on in the red tone

- the stretched image needs to leave room for the curves adjustment, so it looks underwhelming at first; otherwise the curves step overcorrects

- processing in a bright room tempts you to overcorrect - the darker the better

- merging RGB and Ha after stretch was impossible

Thanks for your help!

Arny

Ok. Definite improvement on the earlier stages! I like where it was going.

Then, step 5. O-BLI-TER-ATION. You absolutely obliterated the noise and the details!!

I think this is one of the key...I don't want to say mistake, because there is a subjective opinion aspect here. But, a key "overprocessing" that I think has become quite common these days. Why the need to obliterate noise? I think that over-smoothing the image like that, is actually a key detracting quality factor. I think it DEFINITELY cost you a non-trivial amount of details, and then I think that loss of detail, and the loss of at least some degree of noise, allowed you to push processing too far in the later steps. The final image IS an improvement over your original...BUT...I think you can still do better! ;)

My recommendation now is temperance. Find a way to reduce noise, but not totally obliterate it. Become a pixel peeper, and keep an eye on the fine and faint details. Noise reduction should REDUCE, for sure. I think when noise reduction OBLITERATES, it's definitely gone too far. See if you can find a balance between the two that you are satisfied with. A fine level of grain in the image is, IMHO, a pleasing aesthetic factor...at least, I think a fine, low-level grain is more aesthetically pleasing than the plasticky-smooth appearance of total obliteration. This is actually not a new issue...people have always had trouble finding that balance, I think, especially as newer imagers. I think it has just become RADICALLY easier to overdo these days with AI processing tools.

A fine bit of noise, that light weight "grain", is IMO a factor in good image quality, as well as detail preservation.

It is not just noise removal that causes problems. Star removal does as well...I see a lot of artifact issues with star removal, unless it is done VERY carefully. I am wondering if you really need it here for this image, or if a different or more careful kind of stretching might be good enough. It might be worth processing without the SXT, and just NXT and BXT (but with a bit more of a measured hand).

Another thought, and this is more subjective, but, I really liked the color in step 6. I think you lost something in the subsequent steps. If you can maintain the color from step 6 throughout, I think that would be a wonderful image. Even though the arms might be ever so slightly greenish (maybe), I think overall the color is good.


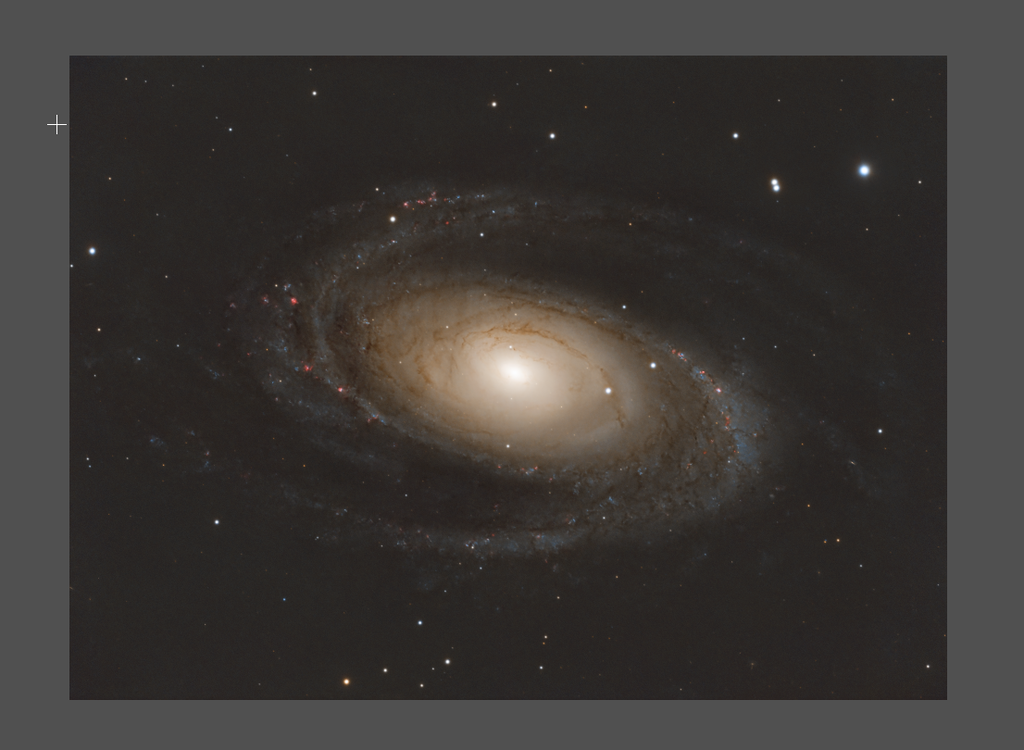

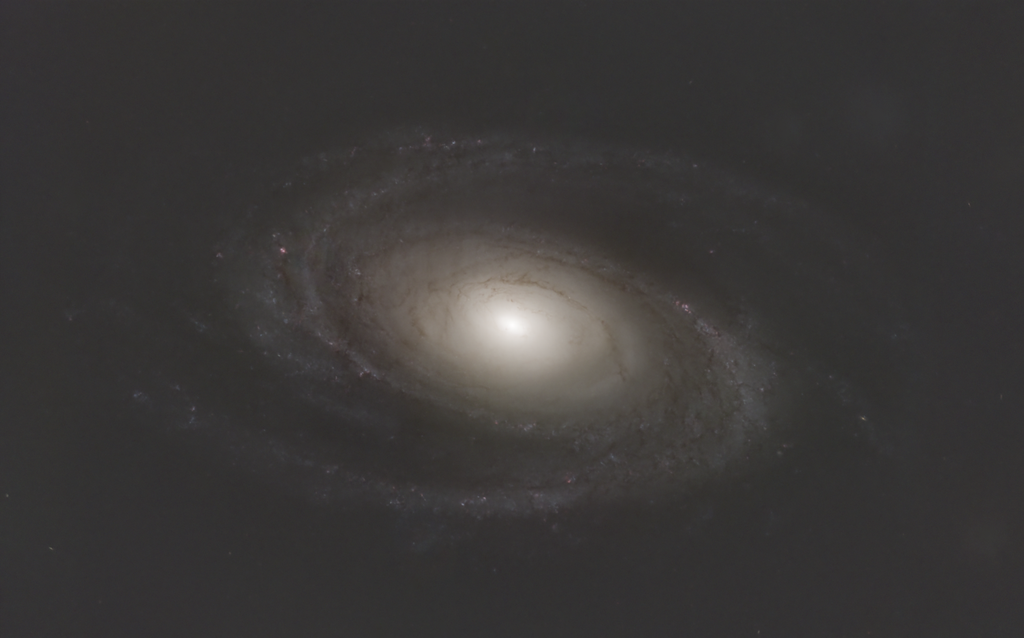

Next processing try :-)


Arny:

Next processing try :-)

Excellent! Details are looking MUCH better!
