To view and download the files, visit:

https://www.sendthisfile.com/icEXmnbepmsuAeBhddqWKvzp

Files:

frame_00030.fits

L_00015.fits

One flat frame and one light frame. Would a dark and bias help too?

---

Problem sorted (no more dust bunnies), see below:

I guess the issue is in how you take and process your flats.

---

FWIW, this could be sample bias. Some subs may have problems while most do not. To the OP, it's worth evaluating your lights to make sure that none of them fall outside the norm.

Otherwise, I have always been suspicious of WBPP, and BPP before it, and I always do my own pre-processing manually to make sure I never run afoul of any quirky issues with the black box.
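One way to act on the suggestion to evaluate the lights is to compare a per-frame statistic across the whole session and flag the frames that stand out. The sketch below is a generic robust outlier test (median and MAD), not a PixInsight feature; the function name, the threshold `k=3.0` and the sample values are hypothetical, and in practice the per-frame statistic would first have to be measured from the FITS files (not shown).

```python
import numpy as np


def flag_outlier_frames(frame_stats, k=3.0):
    """Return the indices of frames whose statistic deviates from the session
    median by more than k robust standard deviations (MAD-based)."""
    values = np.asarray(frame_stats, dtype=float)
    center = np.median(values)
    mad = np.median(np.abs(values - center))
    sigma = 1.4826 * mad  # scales the MAD to a Gaussian-equivalent sigma
    if sigma == 0.0:
        # More than half the frames share one value: flag anything that differs from it
        return np.flatnonzero(values != center)
    return np.flatnonzero(np.abs(values - center) > k * sigma)


# Hypothetical background medians (ADU), one per light frame
medians = [1002, 998, 1005, 1001, 997, 1450, 1003, 999]
print(flag_outlier_frames(medians))  # [5]
```

The same test could be run on other per-frame measures (star FWHM, star count, noise estimates); the median/MAD pair is used so that a few bad frames cannot drag the reference level toward themselves.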

---

I had to modify the flat, so it was no lucky strike. But I'm still waiting for the dark, dark-flat and bias, so I'll reserve judgment until then.

But, yes, I'd need a wider sample than just one throw. Let's see what the OP comes up with.

---

Wow, I'd like to know what you did! Here are a dark and a flat:

To view and download the files, visit:

https://www.sendthisfile.com/fFzcFpZNbpEXMQMpRzVebjXy

Files:

0 25_00015.fits

300 0 25_00015.fits

---

Now, the flat-dark and dark look normal, so no surprise there (basically just the pedestal). To see whether the approach works across the board, I'd need three other random lights, chosen as far apart as possible: ideally at the beginning, around the middle, and at the end of the session. If everything works, you'll have a procedure to get rid of the gremlins, but ideally you'd want to sort out the issue at the source.

BTW, what I'm doing you can't do with WBPP.

---

I'm with Andrea here... when you run into troubles like this, it is best to ditch WBPP and work through the process manually. WBPP is basically a black box: you have some minimal insight into exactly what it's doing through a few "knobs" and "dials", but you have little real control. When it comes to troubleshooting, it's a good idea to eliminate that black box, as it is often the problem itself. If you continue to have problems while pre-processing manually, it's usually a lot easier to work out why, and what to do to resolve the issues.

---

andrea tasselli:

Now, the flat-dark and dark look normal, so no surprise there (basically just the pedestal). To see whether the approach works across the board, I'd need three other random lights, chosen as far apart as possible: ideally at the beginning, around the middle, and at the end of the session. If everything works, you'll have a procedure to get rid of the gremlins, but ideally you'd want to sort out the issue at the source.

OK, here are frames 1, 10, and 20 (and you already have 30):

To view and download the files, visit:

https://www.sendthisfile.com/KyHrjyeqFEvZZCqOpEvNuqYi

Files:

frame_00020.fits

frame_00010.fits

frame_00001.fits

---

I wouldn’t be inclined to call WBPP a “black box,” as it is just configuring and scripting the actual individual processes in PixInsight, and you can review what settings are applied in doing so.

But at the same time, I believe that makes the sentiment of being able to troubleshoot and operate outside WBPP all the more important. Not only does that give access to all settings in the individual processes, it allows for much more rapid troubleshooting and experimentation. And a good understanding of how to use all the processes independently is quite valuable for making the most of choosing configuration options inside WBPP to get the desired results, and being able to troubleshoot and address a problem when it does come up (i.e. turning WBPP into something intelligible as opposed to a black box).

---

andrea tasselli:

BTW, what I'm doing you can't do with WBPP.

I just tried this with ImageCalibration and got far, far better results. Is this the idea? But isn't this supposed to be contained within WBPP?

Update: when I then integrated the ten test frames (which seemed quite clean individually), some of the motes became visible again. Strange!

---

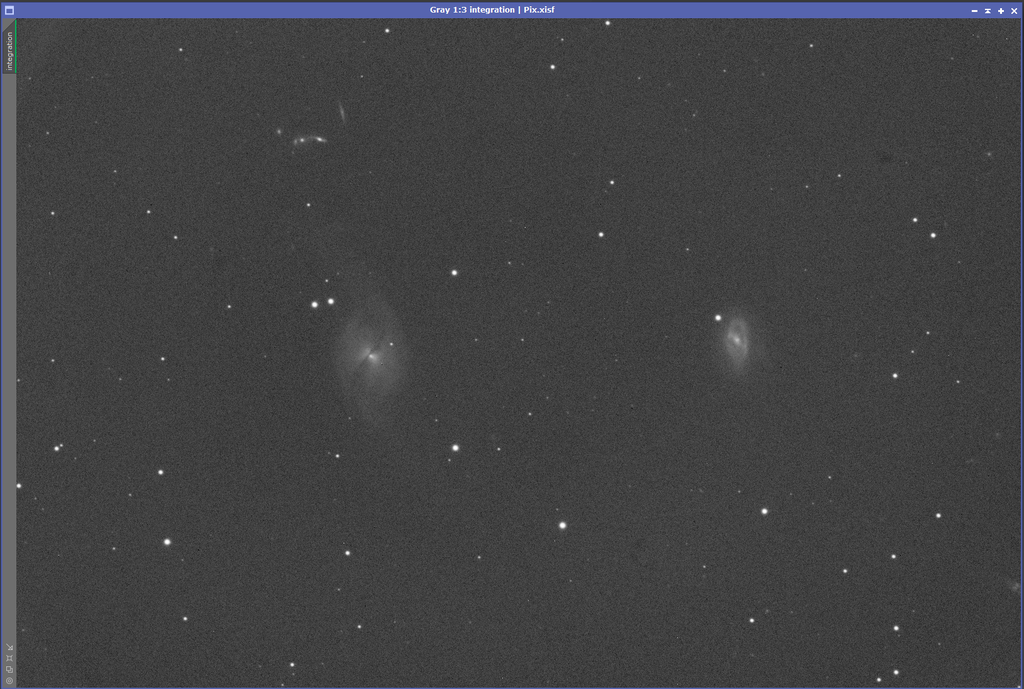

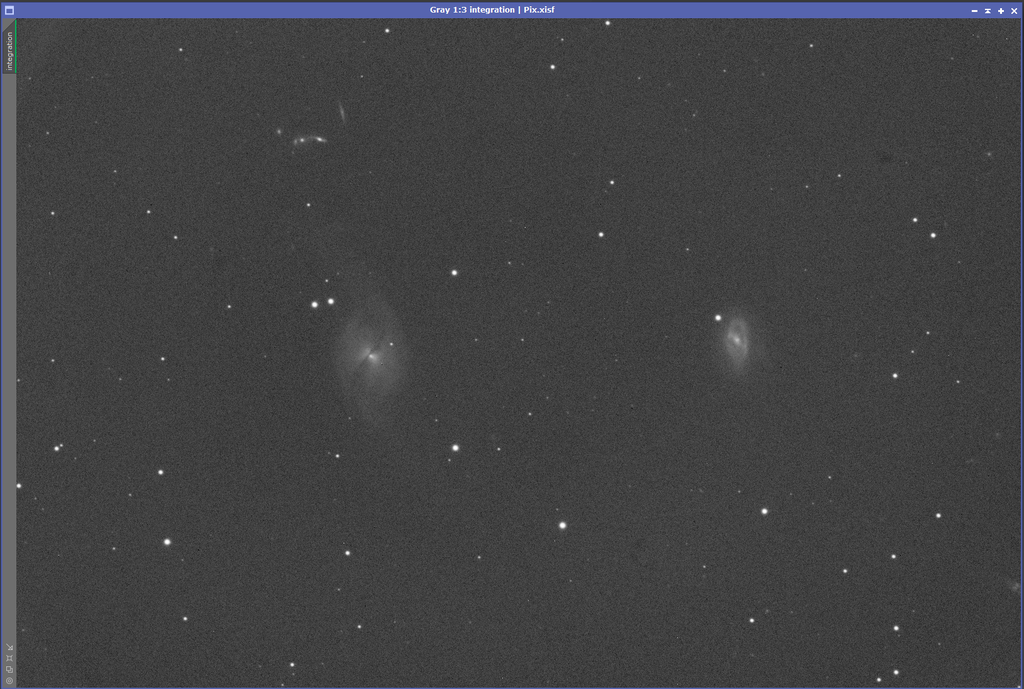

OK, here is the rundown: mirror flop is causing some, but not all, of your grief. To see whether the issue was additive or multiplicative (light leak) in nature, I applied LinearFit to the supplied flat frame, using the last frame of the series as the reference. That frame then calibrated perfectly, as seen in my previous post. I then used the same modified flat frame on all the samples from the sequence, with the results shown in the images above.

There are very marginal residuals that progressively disappear, because of different illumination of very close dust motes and severe clipping at the edges due to vignetting (this is mirror flop).

More to follow...
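The LinearFit step above fits one image to another with a linear function of the form a + b·x. The numpy sketch below shows the idea with an ordinary least-squares fit; it is a stand-in under stated assumptions, not PixInsight's implementation (which has its own rejection and fitting details), and the function name, rejection limits and synthetic images are hypothetical. Splitting the fit into an offset `a` and a slope `b` is what lets it separate an additive component from a multiplicative one.

```python
import numpy as np


def linear_fit(target, reference, reject_low=0.0, reject_high=1.0):
    """Least-squares fit of reference ~ a + b * target over pixels that lie
    strictly inside (reject_low, reject_high) in both images. Returns the
    rescaled target a + b * target and the coefficients (a, b)."""
    t = np.asarray(target, dtype=float)
    r = np.asarray(reference, dtype=float)
    ok = (t > reject_low) & (t < reject_high) & (r > reject_low) & (r < reject_high)
    b, a = np.polyfit(t[ok], r[ok], 1)  # polyfit returns the slope first
    return a + b * t, (a, b)


# Hypothetical images in normalized [0, 1] units
y, x = np.mgrid[0:64, 0:64]
r2 = ((x - 32) ** 2 + (y - 32) ** 2) / 32 ** 2
flat = 0.47 * (1.0 - 0.3 * r2)   # vignetted flat near 30,700 / 65,535
light = 0.005 + 0.03 * flat      # light = offset + scaled flat pattern

fitted, (a, b) = linear_fit(flat, light)
print(round(a, 6), round(b, 6))  # 0.005 0.03
```

With real, noisy data the recovered offset would only be approximate; a large `a` relative to the signal would hint at an additive contribution, while `a` near zero points to a purely multiplicative relation.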

---

James Peirce:

I wouldn’t be inclined to call WBPP a “black box,” as it is just configuring and scripting the actual individual processes in PixInsight, and you can review what settings are applied in doing so.

Perhaps a better term is a "closed box." The key being, you have limited control with WBPP, whereas you have full control if you manually pre-process.

---

WBPP exposes minimal control. Once you get into the individual PI processes, you have full control. You don't have dark optimization enabled here, which I think is the default, but WBPP usually enables it by default. That may be one of the differences. You also have control over the output pedestal. I am not sure what WBPP does there by default, but the pedestal can be a useful tool if you run into certain kinds of problems during pre-processing, and here it is something you have direct and flexible control over.

Are you using a master flat created by WBPP? Or did you calibrate and integrate your own master flat? (Same would go for the master bias and master dark.)
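To illustrate why the output pedestal can matter: after dark subtraction, faint background pixels can go slightly negative, and if the output cannot store negative values (an assumption here; check how your software handles it) they get clipped to zero, which biases the background upward. A small pedestal keeps them positive. This is a generic numpy illustration with hypothetical values and a hypothetical function name, not ImageCalibration's code.

```python
import numpy as np


def dark_subtract(light, master_dark, pedestal=0.0):
    """Subtract a master dark, add an output pedestal, and clip at zero the way
    an output format that cannot store negative values would."""
    out = np.asarray(light, dtype=float) - master_dark + pedestal
    return np.clip(out, 0.0, None)


# Hypothetical background pixels scattered around the dark level (ADU)
light = np.array([498.0, 502.0, 495.0, 505.0, 500.0])
master_dark = 500.0

no_pedestal = dark_subtract(light, master_dark)
with_pedestal = dark_subtract(light, master_dark, pedestal=100.0)

print(no_pedestal.mean())            # 1.4: clipping the negatives biases the mean upward
print(with_pedestal.mean() - 100.0)  # 0.0: the true mean survives
```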

---

For this test I actually used the masters that WBPP had created earlier. I didn't expect much, but it made a big difference. I guess I had better study up on the manual approach.

---

andrea tasselli:

There are very marginal residuals that progressively disappear, because of different illumination of very close dust motes and severe clipping at the edges due to vignetting (this is mirror flop).

Many thanks for this analysis!!

I didn't realize that mirror flop could contribute to this type of problem - I do have an Optec Secondary Focuser but I've been waiting for some better weather before installing it. Seems that I should accelerate that job. Then I can lock the primary (hopefully!) It also seems that I should dig into manual preprocessing further as well. Looking forward to your "more to follow" comments!

Thanks again!

Pete

---

It's pretty straightforward. Unless you know for certain that you need dark flats, just put all the individual flat frames into ImageCalibration. Reference the master bias, and DO NOT include a master dark or master flat. Disable the output pedestal; there's no need for it with flats. The signal and noise evaluation sections are new to me; as long as they don't change what calibration does, you can leave them checked (I suspect they just output details like the SNR of each frame in the report).

Once you have all your calibrated flat frames, move over to ImageIntegration. Bring all the calibrated flat frames in. Integrate with simple averaging and multiplicative scaling (normalization). Don't weight. For pixel rejection, use percentile clipping with equalize fluxes, and for percentile high and low use a value like 0.02, which should be a medium level. You might be able to go even larger, unless you have notable artifacts in your flats like airplane trails or star trails, in which case maybe use 0.015 or 0.01.

When ImageIntegration is done, you'll have your master flat.
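The recipe above can be sketched in numpy to show what each setting does to the data. This is a simplified model under stated assumptions, not PixInsight's code: percentile clipping is approximated as rejecting a sample whose deviation from the per-pixel median, relative to that median, exceeds `pct_low`/`pct_high`, and "equalize fluxes" is approximated by scaling each flat to a common median. The function name and synthetic frames are hypothetical, and no dark flats are assumed, as in the recipe.

```python
import numpy as np


def make_master_flat(flats, master_bias, pct_low=0.02, pct_high=0.02):
    """Bias-subtract flats, equalize their fluxes, reject outliers relative to
    the per-pixel median, and average the surviving samples (no weighting)."""
    stack = np.asarray(flats, dtype=float) - master_bias  # calibration: bias only, no pedestal
    medians = np.median(stack, axis=(1, 2), keepdims=True)
    stack = stack * (medians.mean() / medians)             # multiplicative normalization
    center = np.median(stack, axis=0)                       # per-pixel median across frames
    rel_dev = (stack - center) / center
    keep = (rel_dev >= -pct_low) & (rel_dev <= pct_high)    # simplified percentile-style clip
    counts = keep.sum(axis=0)
    sums = np.where(keep, stack, 0.0).sum(axis=0)
    # Plain average of survivors; fall back to the median if a pixel lost every sample
    return np.where(counts > 0, sums / np.maximum(counts, 1), center)


# Hypothetical 4x4 flats: same vignetting pattern, slightly different brightness
bias = np.full((4, 4), 500.0)
pattern = np.linspace(0.8, 1.0, 16).reshape(4, 4)
flats = [bias + s * 20000.0 * pattern for s in (1.0, 0.98, 1.02, 1.01, 0.99)]
flats[2][3, 3] += 5000.0  # a "trail" hitting one pixel in one frame

master = make_master_flat(flats, bias)
print(np.allclose(master, 20000.0 * pattern))  # True: the trail was rejected
```

Smaller `pct_low`/`pct_high` values reject more aggressively, which matches the advice to drop toward 0.015 or 0.01 when the flats contain trails.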

---

Jon Rista:

Perhaps a better term is a "closed box." The key being, you have limited control with WBPP, whereas you have full control if you manually pre-process.

Spot on as far as I’m concerned.

---

...Following on from my previous post

As I wrote, because the light I used as the reference to recalibrate the (master?) flat is the last in the series, the small differences due to variations in illumination lead to some residuals showing up when the 4 lights are calibrated and integrated, as shown below:

Using GraXpert to remove the obvious LP bias yields:

It can easily be seen that there are still blemishes left around the frame (for the reasons mentioned above).

So a further step might be needed, and then conclusions will follow...

---

Jon Rista:

When ImageIntegration is done, you'll have your master flat.

I'm going to give it a whirl; thanks!

---

Using LinearFit again, this time with each frame as its own reference, yields small further improvements (although integrating the full series should likely give better results):

First conclusion:

Flats should really be tailored to be close to your mean frame value. This was the wisdom passed down when I started in digital AP more than 25 years ago and, as far as the specifics of this situation apply (high LP and a luminance filter), I think it is still a valid point. The mean frame value of the lights is around 1,000 ADU, but the equivalent value for the flat is around 30,700 ADU, roughly 30x as much. In integer math this alone would be frowned upon.

Second conclusion:

There are very few or no light leaks in the optical train, as otherwise this whole exercise would have come to nought.

Third conclusion:

The strongest residuals come from very close dust motes and severe clipping of the marginal rays due to aperture vignetting. While nothing can be done about the latter, cleaning the camera window (with anti-static optical cleaning fluid) is probably worth pursuing.

Way forward: use LinearFit with the middle frame of the series applied to the master flat, and recalibrate the whole series with it. Just remember that you need to subtract the master dark (DO NOT OPTIMIZE it) from each light and THEN use the dark-calibrated light to recalibrate the original flat (also NO BIAS subtraction). So ImageCalibration needs to run twice: once to obtain the dark-calibrated lights, and then to flatten those same lights. Be careful with the naming strategy and keep the file streams separate. The rest of the pre-processing routine can run as usual. Do not forget to run LocalNormalization. And, obviously, no WBPP.
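A minimal numpy sketch of the two-pass procedure, under stated assumptions: LinearFit is approximated by an ordinary least-squares fit of the form a + b·x (PixInsight's own fitting may differ), the refitted flat is normalized by its mean before division, and all function names and synthetic data are hypothetical. Registration, LocalNormalization and integration are not modelled. The additive offset in the synthetic flat is there only to show the refit absorbing it; it is not a claim about the OP's data.

```python
import numpy as np


def linear_fit(target, reference):
    """Least-squares fit reference ~ a + b * target; returns a + b * target."""
    b, a = np.polyfit(target.ravel(), reference.ravel(), 1)
    return a + b * target


def two_pass_calibrate(lights, master_dark, master_flat):
    # Pass 1: master dark only (not optimized, no bias, no flat)
    dark_cal = [np.asarray(light, dtype=float) - master_dark for light in lights]
    # Refit the master flat to the middle dark-calibrated light of the series
    reference = dark_cal[len(dark_cal) // 2]
    refit_flat = linear_fit(np.asarray(master_flat, dtype=float), reference)
    norm_flat = refit_flat / refit_flat.mean()
    # Pass 2: flatten the same dark-calibrated lights with the refitted flat
    return [frame / norm_flat for frame in dark_cal]


# Hypothetical data: a vignetting pattern, a flat with an additive offset,
# and lights at ~1,000 ADU above a constant master dark
pattern = np.linspace(0.6, 1.0, 100).reshape(10, 10)
master_dark = np.full((10, 10), 500.0)
master_flat = 30700.0 * pattern + 300.0
lights = [master_dark + level * pattern for level in (990.0, 1000.0, 1010.0)]

calibrated = two_pass_calibrate(lights, master_dark, master_flat)
print([round(float(np.ptp(c) / c.mean()), 6) for c in calibrated])  # [0.0, 0.0, 0.0]
```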

I'm knackered...

---

Very nice work, Andrea.

It is good to be part of a thread where the problem posed is solved and we all learn something in the process.

I do have a question relating to the below statement:

The mean frame value of the lights is around 1,000 ADUs but the equivalent value for the flat is around 30,700 ADUs, that is 30x as much. In integer math this alone would be frowned upon.

Since the sensor is well behaved and linear, and since the flat is divided by its median value, why does this difference matter, so long as no pixels in the flat are saturated? It seems to me that the LinearFit process you describe simply scales the flat, but since the flat is then rescaled by its median, this step should not, in floating point arithmetic, matter at all.
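The question can be checked numerically: in floating point, multiplying the flat by a constant cancels out once the flat is normalized by its median. A fit of the form a + b·x, however, also carries an offset a, and an offset does not cancel. The sketch below uses hypothetical values chosen to echo the ~1,000 and ~30,700 ADU figures; it illustrates the arithmetic only and makes no claim about what LinearFit did to this particular flat.

```python
import numpy as np


def flatten(light, flat):
    """Divide a light by a flat that has been normalized to its median."""
    return light / (flat / np.median(flat))


pattern = np.linspace(0.6, 1.0, 101)  # hypothetical vignetting profile
light = 1000.0 * pattern              # light around 1,000 ADU
flat = 30700.0 * pattern              # flat around 30,700 ADU

base = flatten(light, flat)
scaled = flatten(light, (1000.0 / 30700.0) * flat)  # flat rescaled to the light's level
offset = flatten(light, flat + 500.0)               # flat with an additive term added

print(np.allclose(base, scaled))  # True: a pure scale factor cancels
print(np.allclose(base, offset))  # False: an offset does not cancel
```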

---

andrea tasselli:

First conclusion:

Flats should really be tailored to be close to your mean frame value. This used to be the wisdom passed down when I started in digital AP more than 25 years ago and, as far as the specifics of this situation apply (high LP and luminance filter), I think this is still a valid point. The mean frame value of the lights is around 1,000 ADUs but the equivalent value for the flat is around 30,700 ADUs, that is 30x as much. In integer math this alone would be frowned upon.

Could you explain this more? I've actually done this in the past, and I always had problems with it. Sometimes it was a combined issue with insufficiently exposed lights and flats. Flats are divided out, not subtracted or added, so I am not sure why a 30k ADU level would be mathematically problematic.

With CMOS cameras, a lot of the time the signal level is only a few tens of ADU, which may not be sufficient for flats to fully and effectively model the full shape of the field. Further, flats, more than anything, are intended to correct FPN (fixed-pattern noise) due to PRNU (photo-response non-uniformity). This is the reason for the (apparently contentious) topic of always matching the gain setting with flats. Without a gain match, the PRNU is usually going to be different. Even with an otherwise perfect field, with no vignetting or other shading from, say, dust motes, you would still want to calibrate with a master flat to correct PRNU.
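The core of the PRNU argument is that PRNU is multiplicative: each pixel scales the incoming light by its own gain, so dividing by a flat that carries the same pattern cancels it. The toy model below uses a hypothetical 1% Gaussian gain spread and noiseless frames. The `other` flat, with a completely unrelated pattern, is a deliberately extreme stand-in for a flat whose PRNU does not match the lights (for instance one taken at a different gain, if the pattern really changes with gain as described); real mismatches would usually be partial.

```python
import numpy as np

rng = np.random.default_rng(42)
shape = (256, 256)
prnu = 1.0 + 0.01 * rng.standard_normal(shape)  # hypothetical 1% pixel-to-pixel gain spread

light = 1000.0 * prnu  # noiseless, uniformly lit light seen through the PRNU pattern
flat = 30000.0 * prnu  # noiseless flat with the same PRNU pattern
other = 30000.0 * (1.0 + 0.01 * rng.standard_normal(shape))  # flat with an unrelated pattern


def rel_std(img):
    """Standard deviation relative to the mean, i.e. the residual FPN level."""
    return img.std() / img.mean()


matched = light / (flat / flat.mean())
mismatched = light / (other / other.mean())

print(rel_std(light))       # about 0.01: the FPN before flat-fielding
print(rel_std(matched))     # essentially 0: the matching flat removes it
print(rel_std(mismatched))  # about 0.014: an unrelated pattern makes it worse
```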

Some people argue that correcting PRNU doesn't really matter. When people were stacking a mere 10-15 ultra-long light frames back in the CCD days, I would have agreed, but these days, with people often stacking hundreds, I think it matters more (not to mention PRNU is often higher on these cameras, especially with the lower bit depths of 12 or 14 bits). There may not be a measurable difference between certain gains, whereas there can often be a very notable difference between others (e.g. HCG mode vs. not, or gain 0 vs. a fairly high gain). It's easy enough to match gain, and it can eliminate potential problems that might exist if you don't.

So the primary reason to use a flat is to correct PRNU, and secondarily to correct field structure/shading. I've worked with many cameras that have rather rogue PRNU. It is not always spatially random; it can be quite notable:

![gzyeL56[1].jpg](https://cdn.astrobin.com/ckeditor-thumbs/7253/2024/16aea973-90a6-4691-816c-2654e3267973.jpg)

A close up crop demonstrates the notable structure in the PRNU pattern:

![9EoZ7cH[1].jpg](https://cdn.astrobin.com/ckeditor-thumbs/7253/2024/2b3e5f44-814b-4e92-b302-3cbfe32fdb1f.jpg)

This field structure, after a fair amount of testing, proved to be the intrinsic PRNU-derived FPN of the sensor. It was not due to frosting or dew, or any kind of large-scale dust motes (i.e. the flatter region near the center-right), etc. There is of course some vignetting here, but the structures are all from the sensor. Some sensors may be very, very clean and not exhibit such structure... that doesn't mean they don't have PRNU, or that it isn't large enough in scale that it should be corrected by flats. In fact, I think a super clean field and noise profile in flat frames can often lull people into a false sense of security about whether they should be doing optimal flat calibration (i.e. matched gain, and sufficient exposure to fully model all the field factors that may be present).

It is the safer path to just make sure you always match the gain (easy enough)... but I do think that getting sufficient exposure is also important. Exposing flats to the same median level as the lights can be problematic in my experience, and such a master won't necessarily model field structure or PRNU effectively, or correct it optimally. Most of the time this shows up in the darkest parts of vignetted corners, but I've had issues manifest elsewhere at times.

From a scientific standpoint, or from a PTC (photon transfer curve) generation standpoint, the recommendation has always been to expose your flat frames to a middling ADU level, around 30-50% of the range, to make sure they are squarely in the linear range of the sensor. Another thing that scientific literature often espouses is getting a rather massive amount of total ADU in the master flat... to the tune of around 100 million. I may have a link to the paper that explains this...
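As a quick arithmetic check of the 30-50% guideline, assuming a 16-bit ADC (the ASI6200 mentioned in this thread is a 16-bit camera): the window runs from roughly 19,700 to 32,800 ADU, so the ~30,700 ADU flat measured earlier in the thread sits inside it, while a flat exposed to the lights' ~1,000 ADU level would fall far below it. The helper names are hypothetical.

```python
def flat_level_window(bit_depth=16, lo=0.30, hi=0.50):
    """Return the (low, high) ADU window for a flat exposed to lo..hi of full scale."""
    full_scale = 2 ** bit_depth - 1
    return lo * full_scale, hi * full_scale


def flat_level_ok(flat_median_adu, **window_kwargs):
    """True if the flat's median ADU falls inside the recommended window."""
    low, high = flat_level_window(**window_kwargs)
    return low <= flat_median_adu <= high


print(flat_level_window())   # roughly (19660.5, 32767.5)
print(flat_level_ok(30700))  # True
print(flat_level_ok(1000))   # False
```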

Another factor I've encountered with proper flat fielding is that your lights should also be sufficiently into the linear range of the sensor. In the past I've worked with people who had some very strange flat-fielding problems, and it was due to insufficient exposure. The most heavily shaded portions of the frame, outside of star signals, were actually getting no object or background sky signal at all. Flats simply could not properly correct such lights. Once the lights were exposed properly, such that the more heavily shaded regions (mainly within the corner vignette) reached a more reasonable criterion like 10×RN² (ten times the read noise squared), the flat-fielding problems went away.
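The 10×RN² criterion converts to a target background level once the read noise (in electrons) and the gain (e-/ADU) at the chosen setting are known. A minimal sketch with hypothetical camera values, not measured ones for any particular sensor; if a camera scales a lower bit-depth ADC output up to 16 bits, that factor would also need to be applied. Per the post, the check matters most in the most heavily vignetted corners.

```python
def min_background_adu(read_noise_e, gain_e_per_adu, offset_adu=0.0, factor=10.0):
    """ADU level at which the background sky signal reaches factor * RN^2 electrons."""
    target_e = factor * read_noise_e ** 2          # e.g. 10 x RN^2 electrons
    return offset_adu + target_e / gain_e_per_adu  # convert electrons to ADU, add the offset


# Hypothetical values: 1.5 e- read noise, 0.25 e-/ADU gain, 500 ADU offset
print(min_background_adu(1.5, 0.25, offset_adu=500.0))  # 590.0
```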

Such has been my experience with flat correction...

---

Much appreciated! Thanks!

I'll try to follow those guidelines and see how it goes. Just an aside: I wonder if some of the corner vignetting you're seeing is the actual physical cut-off of the ASI6200 where it mounts to the filter wheel? It's plain to see in all light and flat frames. It's bizarre, but all four corners are actually slightly cut off, even though both devices are ZWO.

---

Good question, Arun. But is it floating-point arithmetic or is it not? In the days of yore it would have been integer arithmetic all the way, but now it should be FP all the way, should it not? In theory it shouldn't matter, as long as the flat signal is not weaker than the mean frame value it is meant to correct: since the operation being carried out is a renormalization by the flat, the absolute value is (or should be) immaterial.

But what if the sensor in question is ever so slightly non-linear, and what if this non-linearity depends on gain? This is just speculation on my part and difficult to test outside laboratory conditions, but I might have a go at it.
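The non-linearity speculation can be explored with a toy model: if the sensor response were slightly non-linear, a flat exposed far above the lights would no longer have exactly the same shape as the vignetting seen in the lights, and division would leave a gradient. The sketch assumes a hypothetical quadratic response with coefficient `c = 1e-7` (about a 0.3% shortfall at 30,700 ADU); it demonstrates the mechanism only and is not evidence that the OP's sensor behaves this way.

```python
import numpy as np


def response(signal, c=1e-7):
    """Hypothetical, slightly non-linear sensor: output falls short by c * signal**2."""
    return signal - c * signal ** 2


def flat_residual(flat_level, light_level=1000.0):
    """Peak-to-peak residual (relative) after flat-fielding a light with a flat
    exposed to flat_level, both seen through the same vignetting profile."""
    v = np.linspace(0.5, 1.0, 201)  # hypothetical vignetting profile
    light = response(light_level * v)
    flat = response(flat_level * v)
    out = light / (flat / np.median(flat))
    return np.ptp(out) / out.mean()


print(flat_residual(1000.0))   # essentially 0: flat at the light's level
print(flat_residual(30700.0))  # about 1.5e-3: flat at ~30x the light's level
```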
